29 March 2024


Every team works in different ways, and the way in which teams do code reviews is no exception.

There is no universally agreed-upon way of doing effective code reviews. Still, while the specifics of each team’s process might be different, the underlying motivations for performing code reviews are the same.

We will see what the motivations are behind code reviews, how they manifest in practice, how they fit into the normal workflow for developers, and, finally, we will detail their most important aspects and how that process is conducted at SIG.

Code reviews serve various purposes, some of a more technical nature, others more closely tied to the human and teamwork side of writing code.

By making sure that every PR is a team effort, the responsibility is shared between the person who wrote the code and the person who reviews it, which means that every change is looked at by at least two people before it is approved. As Eric Raymond put it: “given enough eyeballs, all bugs are shallow”. Besides this, there are other positive implications for the overall health of the codebase:

By checking the correctness and completeness of the implementation, as well as additional non-functional requirements, like test coverage not dropping and no regressions being introduced, we can ensure as a team that the quality of the new code is up to the standards of the existing code.

When there is a deliberate effort to maintain quality, maintainability improves as a result: the code becomes easier to extend and reason about.

Another very important benefit, albeit a less tangible one, is that it signals to new developers joining the team that quality is at the forefront and taken seriously. It makes them more aware of these aspects so they can quickly level up to the high quality standards that the team holds itself to: code must be rated at 4 stars, indicating that its quality is above the industry average and signaling to other developers that the code is easy to maintain and extend.

At its core, a code review is a process of (usually) asynchronous collaboration between the reviewer and the person who wrote the code. The reviewer goes over the changes put up for review, following a structured approach that is usually a combination of the industry’s best practices and the specific practices within your team or organization. Along the way, the reviewer leaves small comments that are discussed between both parties and resolved either by changing the code or by reaching an agreement, until no new comments are added.

Once the PR has been reviewed and an agreement has been reached, the code can then be merged into production. But what exactly is addressed during a code review?

The process at SIG is usually a mix of addressing high-level concerns about the business logic and enforcing code style, structure, and conventions, in such a way that when all comments have been addressed, the PR is deemed to be both functionally and non-functionally correct, complete, and in line with the existing codebase.

This review process, while not (always) fully structured, is very effective at flushing out critical issues with the PR with respect to the business domain as well as performance, maintainability, consistency, readability, reliability, and security.

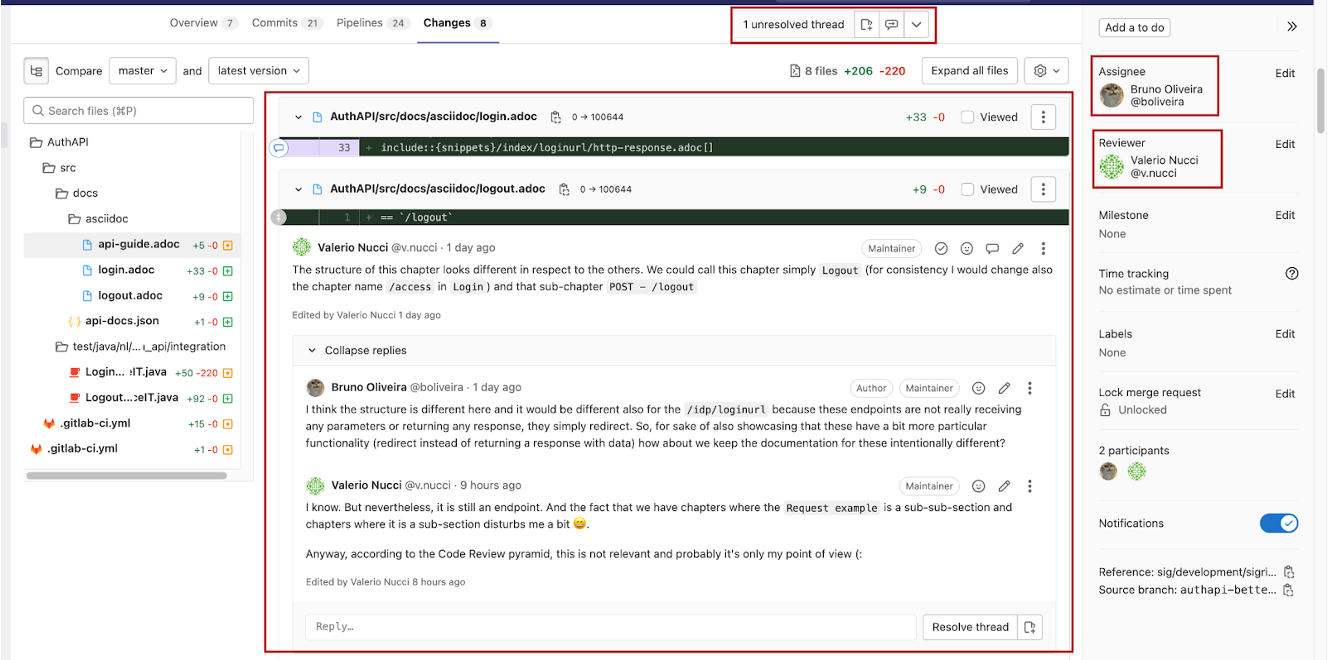

Each company and team can use its own set of tools and processes to conduct a code review. At SIG, we rely on GitLab to assign reviewers and start discussions on a merge request, and the review itself is done directly in the browser, via the GitLab UI. This approach lets us manage the code, merge requests, branches, and code review process in one centralized location, which increases developer efficiency.

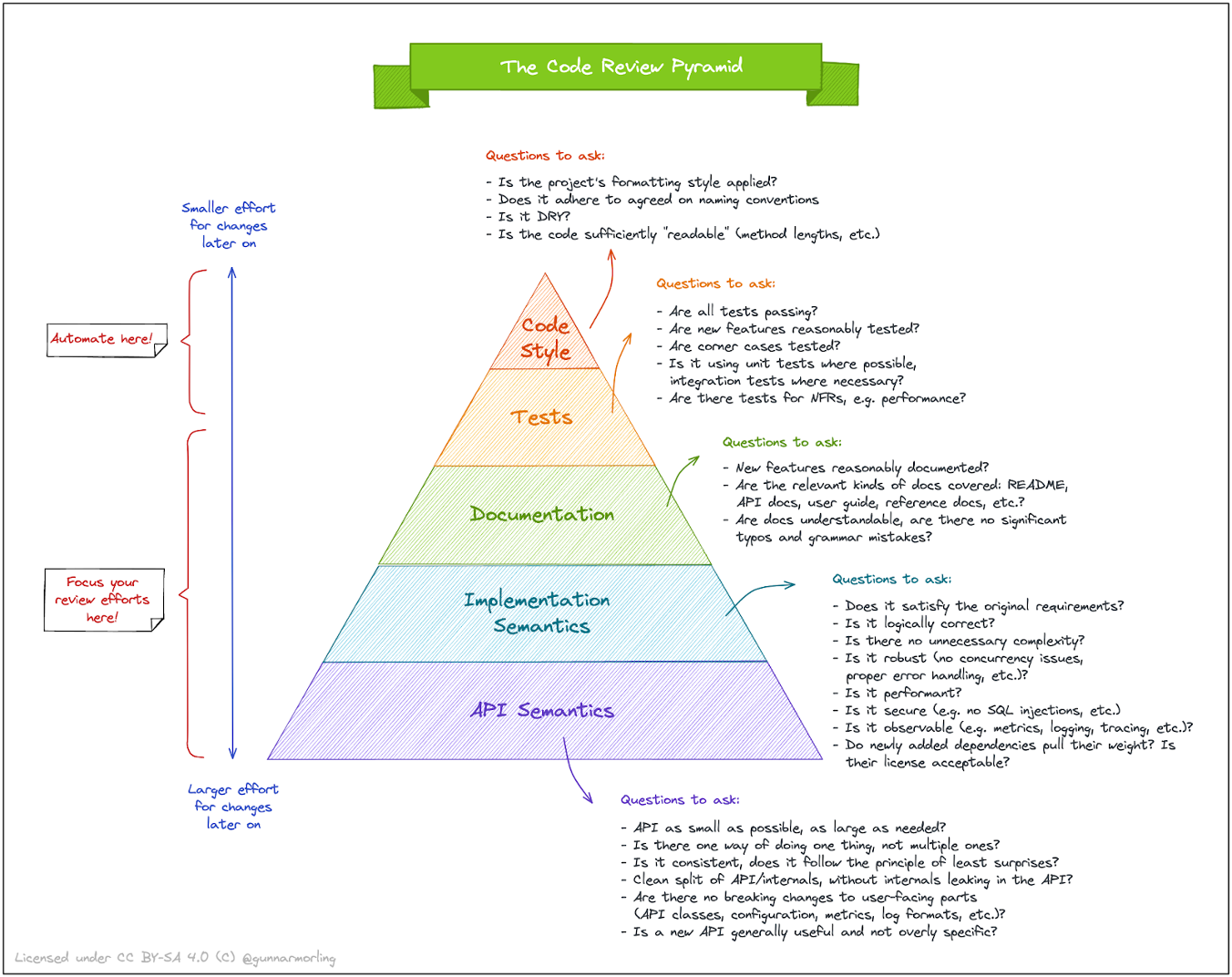

A very insightful view on the importance of looking at several different levels of abstraction in a code review can be seen in the code review pyramid below, which we have recently adopted as part of our process:

References: Gunnar Morling, https://www.morling.dev/blog/the-code-review-pyramid/

We can see that API and implementation semantics, i.e. verifying that the actual implementation of a given ticket follows the correct business logic and behaves as intended, are where most of the effort must be placed. These are usually the trickier parts to get right and, as such, the parts that are most important to have reviewed by colleagues with more domain knowledge.

As for tests and code style, these aspects, while important, can be largely automated, and we do that at SIG by having a CI/CD pipeline in place.

As described earlier, we use GitLab to conduct our code review process. The screenshot below shows a code review in the GitLab UI:

We can see there is an assignee, the person responsible for producing the work that makes up the merge request, and an assigned reviewer, who is the person responsible for performing the review.

We can also see the ongoing discussion directly in the GitLab UI, with some considerations from both people. The most important aspect is to always keep interactions friendly and focused on constructive criticism, so that both the author and the reviewer get the most out of it.

Once an agreement has been reached, the unresolved review thread can be marked as resolved, and afterward, the work can be merged into production once the reviewer approves it. Note that we require at least one person besides the author of the changes to approve them to ensure that the review process is strictly followed.
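
To make the rule concrete, here is a minimal sketch in Java of the merge condition described above: all review threads resolved, and at least one approval from someone other than the author. The `MergeGate` class and its parameters are hypothetical names used only for illustration. They do not correspond to the GitLab API, where such rules are configured in the project settings rather than written by hand.

```java
import java.util.List;
import java.util.Set;

/** Hypothetical helper illustrating the merge rule; not part of GitLab. */
final class MergeGate {
    private MergeGate() {}

    /**
     * @param author         username of the merge request author
     * @param approvers      usernames that approved the merge request
     * @param threadResolved one flag per review thread, true when resolved
     * @return true when the merge request satisfies both conditions
     */
    static boolean canMerge(String author, Set<String> approvers, List<Boolean> threadResolved) {
        // An approval only counts when it comes from someone other than the author.
        boolean independentApproval = approvers.stream().anyMatch(a -> !a.equals(author));
        // Every discussion thread must have been marked as resolved.
        boolean allResolved = threadResolved.stream().allMatch(Boolean::booleanValue);
        return independentApproval && allResolved;
    }
}
```

With this sketch, a merge request approved only by its own author, or one with an open thread, would not be mergeable.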

It’s also important to mention that not all merge requests are equally impactful or critical. The difference in experience between the author and reviewer can range from significant to none, and, as such, we adapt our code review process by adhering to the following guidelines:

We consider a merge request to be reviewed once the reviewer approves it, which for us is done via the GitLab UI.

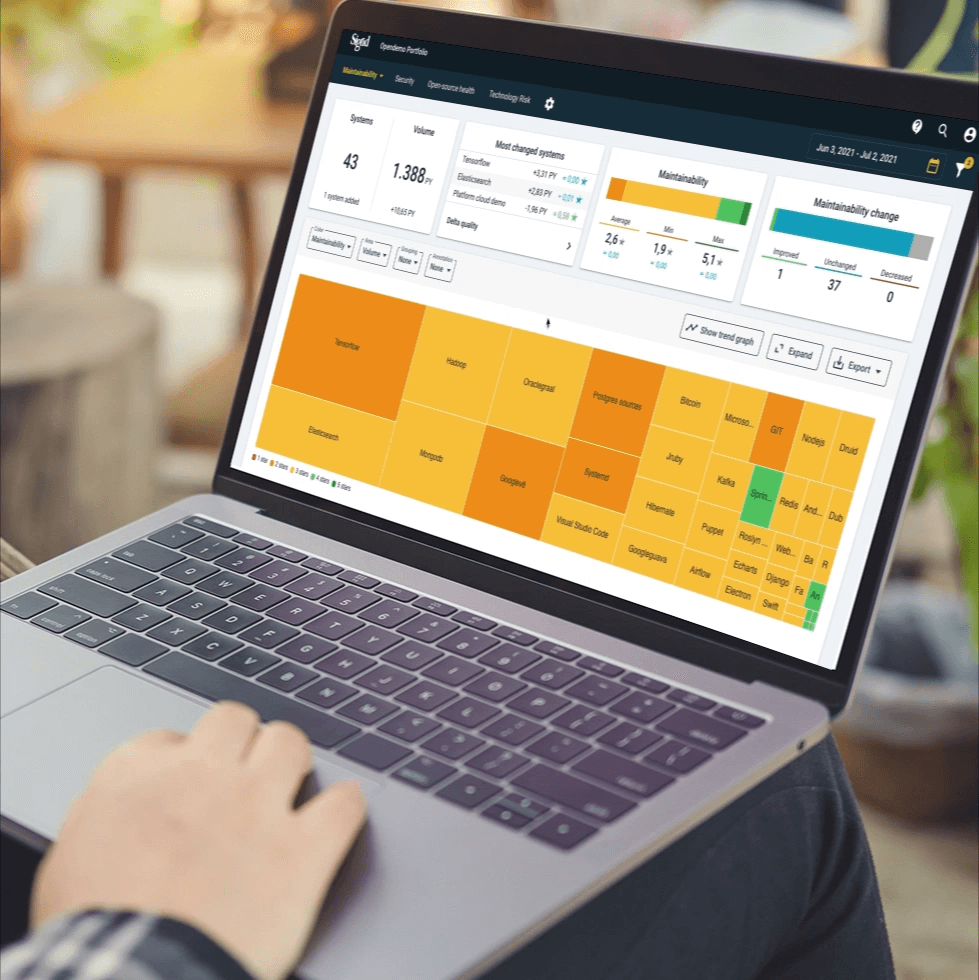

One particularly interesting aspect of the code review process at SIG is how we leverage our own tooling to aid us in code reviews. Specifically, we run Sigrid CI in our own pipelines, which allows us to use our own models to ensure that the code being written and reviewed conforms to the same quality standards that we hold our customers to.

As a nice example, after extending the functionality of a given class we had code that looked okay when reviewed in isolation within the context of the existing merge request, but our own Sigrid CI pointed out that we had inadvertently introduced a small duplication with code from a different, untouched package. This allowed the author of the merge request to refactor the code such that both call sites now call a single, centralized implementation in a common package. So we look not only at the quality of the code within the context of a given merge request, but also at the quality of the project as a whole.
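
The shape of such a refactoring can be sketched as follows. This is not the actual code from that merge request: `NameNormalizer`, `ReportTitles`, and `UserLookup` are hypothetical names, and the normalization logic is an assumed stand-in for whatever was duplicated. In a real project the three classes would live in separate packages; they are shown together here to keep the example self-contained.

```java
import java.util.Locale;

/** Hypothetical shared helper, imagined to live in a common package. */
final class NameNormalizer {
    private NameNormalizer() {}

    /** The logic that used to be copied into both call sites. */
    static String normalize(String raw) {
        return raw.trim().toLowerCase(Locale.ROOT);
    }
}

/** Hypothetical call site in the package touched by the merge request. */
final class ReportTitles {
    private ReportTitles() {}

    static String keyFor(String title) {
        return "report:" + NameNormalizer.normalize(title);
    }
}

/** Hypothetical call site in the different, previously untouched package. */
final class UserLookup {
    private UserLookup() {}

    static String keyFor(String username) {
        return "user:" + NameNormalizer.normalize(username);
    }
}
```

A reviewer who only sees the diff for `ReportTitles` has no reason to open `UserLookup`, which is why a project-wide duplication check is a useful complement to human review.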

As a plan for the future, as our team keeps growing, we are considering looking into tools that can be hooked up to our own build pipelines with as little configuration as possible, so they can run by default. This will increase the assurance of safety and correctness of our code and prevent a whole class of bugs from making their way past the build pipeline.

At SIG, we are fans of relying on our own tools for improving our own workflows, and just as we adopted Sigrid CI internally before releasing it to the general public, we will keep “dogfooding” by improving and using our own tools as a means to improve our code reviews.

Since our stack within the backend team is mostly Java-based, we are considering looking into tools such as Error Prone in the future.
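
As an illustration of the kind of bug such a tool targets, consider the sketch below. It compiles under plain `javac`, yet the first method never finds anything, because an `int` argument is boxed to an `Integer` and an `Integer` never equals a `Long`. To our understanding, Error Prone documents a check named `CollectionIncompatibleType` for this pattern. The `IncompatibleLookup` class is a hypothetical example and not code from our codebase.

```java
import java.util.Set;

/** Hypothetical example of a type-mismatch bug that compiles without warnings. */
final class IncompatibleLookup {
    private IncompatibleLookup() {}

    /** Buggy: contains(Object) receives an Integer, which never equals a Long. */
    static boolean buggyContains(Set<Long> ids, int id) {
        return ids.contains(id);
    }

    /** Fixed: widening to long first makes the argument box to a Long. */
    static boolean fixedContains(Set<Long> ids, int id) {
        return ids.contains((long) id);
    }
}
```

A human reviewer can easily read past the first version, which is the argument for letting the build pipeline flag it by default.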

Code reviews are based on collaboration between people, and interpersonal conflicts can often arise during this process. While these conflicts, when left unattended, can hinder the process and affect the team’s cohesion and morale, when managed properly they can actually empower teams and individuals to become better, as seen in this research paper.

There’s also research worth reading about addressing specific aspects of software development with intent. For example, an extra focus on security vulnerabilities during a review increases the likelihood of such vulnerabilities being found early.

We looked at the role that code reviews play in ensuring that code quality is at the forefront of the work done by any software development team, and, in particular, we took a deep dive into how we approach this process at SIG, namely by relying on GitLab for creating branches, opening merge requests, and starting, tracking, and signing off on code reviews.

We presented a recent concept that surfaced in the software development scene, the code review pyramid, and how it shows which software development aspects are more important to focus on during the process of reviewing code.

Finally, there are also companies where code reviews are not done at all, or are done on a more ad-hoc basis, only when deemed necessary, which is an interesting contrast to read about.

Thanks to my colleague Mircea Cadariu for reviewing this post and suggesting improvements with respect to our future work and regarding which aspects are important for our team when actually performing code reviews.
