29 March 2024

Written by: Martin Boerman

Last weekend I participated, together with fifteen co-workers, in the world’s largest online hackathon: Odyssey Momentum. We continuously monitored code quality throughout the event, live-tracking the source code of the more than 2,000 hackathon participants as it was being written. We used our own Sigrid platform for this, connecting directly to the development teams’ GitHub repositories*.

We found that maintainability and security risks surface easily when the main objective is functionality. We appreciate, of course, that a hackathon is all about building something that at least works when pitched to the jury. Still, if a team can deliver a functioning prototype while keeping quality standards high and avoiding security risks, that indicates the team’s intrinsic maturity.

Chasing quality in a virtual world

For the first time, partly driven by the ongoing pandemic, this hackathon took place in a unique online virtual arena: a game environment of sorts. Momentum brought together over 2,000 participants from all over the world, working in more than 100 teams to solve challenges relevant to the 21st century. My colleagues and I joined not as developers but as ‘Code Jedis’, to spread the word about software quality and to hold ‘Grill Sessions’ with teams about the technical quality of their work.

During this event SIG measured the build quality and security of applications with two main goals:

It was impressive to be part of this online hackathon: the atmosphere was unique, with so many participants working remotely for 49 hours straight on little to no sleep. Over the weekend we got curious. What is the impact of this pressure-cooker coding endeavour on code quality? Will code quality suffer from the speed and competition that define hackathons? And what about adhering to security practices under such short deadlines?

In just two days, we accumulated metrics on 89 systems, built with 14 different technologies, with a total volume of 17 person-years (the equivalent of roughly 140,000 lines of code).
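To put these volume figures in perspective, here is a back-of-the-envelope calculation that derives two averages from the reported totals: the average size per system and the ratio of lines of code to person-years implied by the two totals. This is a sketch under stated assumptions: it treats the rough 140,000-line figure as exact, and the implied ratio is not SIG’s actual person-year conversion, which this article does not describe and which may differ per technology.

```python
# Reported (approximate) totals for the Odyssey Momentum systems.
TOTAL_LINES_OF_CODE = 140_000
TOTAL_PERSON_YEARS = 17
SYSTEM_COUNT = 89


def average_lines_per_system(total_loc: int, systems: int) -> float:
    """Average codebase size, assuming the volume is spread over all systems."""
    return total_loc / systems


def implied_lines_per_person_year(total_loc: int, person_years: float) -> float:
    """Ratio implied by the two reported totals (an illustration only,
    not SIG's technology-specific conversion)."""
    return total_loc / person_years


if __name__ == "__main__":
    avg = average_lines_per_system(TOTAL_LINES_OF_CODE, SYSTEM_COUNT)
    ratio = implied_lines_per_person_year(TOTAL_LINES_OF_CODE, TOTAL_PERSON_YEARS)
    print(f"Average system size: ~{avg:,.0f} lines")
    print(f"Implied ratio: ~{ratio:,.0f} lines per person-year")
```

On these numbers, the average hackathon system is only about 1,570 lines of code, which is worth keeping in mind when comparing them with the much larger systems in the benchmark.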

So what did we find?

Let’s dive a bit deeper into Maintainability, before we discuss third party libraries in more detail.

Writing maintainable code under pressure

The SIG Maintainability benchmark rates systems against others in the industry. Our model expresses Maintainability on a scale of 1 to 5 stars. A 3-star rating means being within the market average range, 4 stars is considered above market average, and 5 stars is reserved for a small top segment of about 5% of the benchmark.
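A benchmark-relative star rating can be thought of as a mapping from a system’s position in the benchmark to a number of stars. The sketch below illustrates that idea. Only the “top ~5% gets 5 stars” figure comes from this article; the other cut-offs assume a 5/30/30/30/5 split of the benchmark, and the function name and percentile convention are hypothetical. It also rounds down to whole stars, which is a simplification of how ratings are reported.

```python
# Assumed cut-offs for a 5/30/30/30/5 split of the benchmark, listed from best
# to worst: top 5% -> 5 stars, next 30% -> 4, middle 30% -> 3, next 30% -> 2,
# bottom 5% -> 1. Only the top-5% figure is stated in the article.
STAR_CUTOFFS = [(95.0, 5), (65.0, 4), (35.0, 3), (5.0, 2)]


def stars_for_percentile(percentile: float) -> int:
    """Map a benchmark percentile (0 = worst, 100 = best) to whole stars."""
    if not 0.0 <= percentile <= 100.0:
        raise ValueError("percentile must be between 0 and 100")
    for lower_bound, stars in STAR_CUTOFFS:
        if percentile >= lower_bound:
            return stars
    return 1  # bottom 5% of the benchmark


if __name__ == "__main__":
    for p in (99, 80, 50, 20, 2):
        print(f"percentile {p}: {stars_for_percentile(p)} stars")
```

Because the rating is relative, a system’s stars can change when the benchmark itself shifts, even if its code does not.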

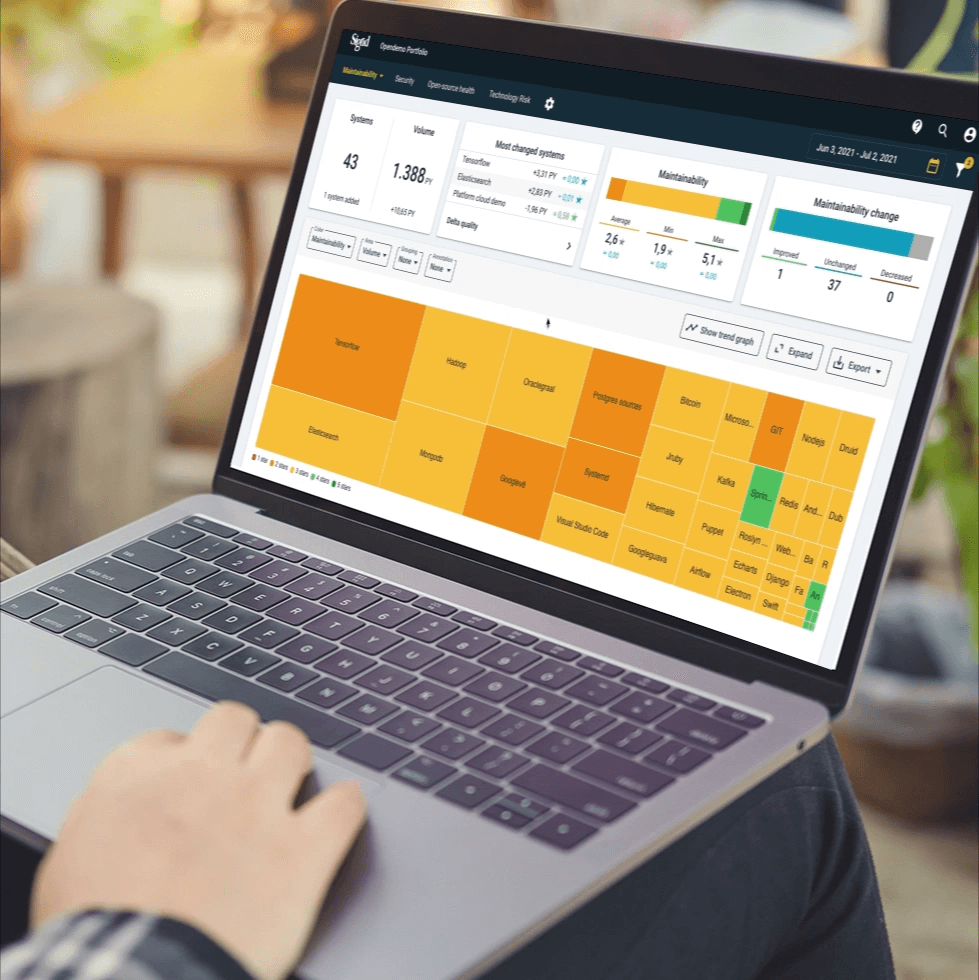

^Screenshot of Sigrid, showing how teams are performing on Maintainability

Ratings of 3 stars and below show up in yellow and orange in the screenshot above. However, we see a lot of (dark) green, which indicates above-average scores. That is, of course, a very good result!

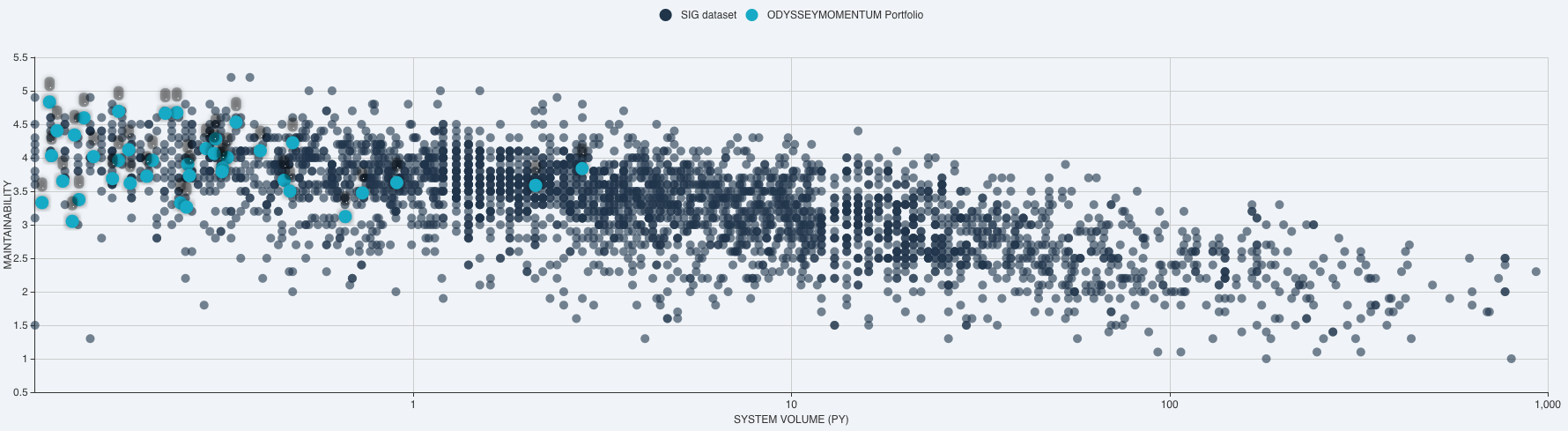

But with only two days’ worth of code, some aspects of our model rated high simply because the codebases were still small. Consider the graph above, which compares the hackathon systems with our industry benchmark for Maintainability. Compared with similarly sized systems, some nuance is in order: although quality was high, this can reasonably be expected from modern greenfield software development, especially for very small codebases.

Each dot in the graph above represents a system in the SIG benchmark**, and there are more than 5,000 of them. The light-blue dots are the systems we saw during Odyssey Momentum.

Two things definitely stand out immediately:

All in all, we should applaud the Odyssey Momentum teams for developing code in line with industry standards while working remotely in a pressure-cooker hackathon environment, and while focusing primarily on delivering a functioning minimum viable product to stay ahead of the competition.

This refutes our assumption that quality would suffer under the high pressure of the hackathon. Building quality software and focusing on functionality can go together. At least for two days…

Hidden issues in third party library usage

Open source software is a big help in software development, but it comes with several caveats. Using open source libraries carries its own risks, which teams are expected to manage through a clear practice for selecting, using, and updating these dependencies. That is exactly the sort of thing one might forget or simply skip when developing around the clock during a hackathon.

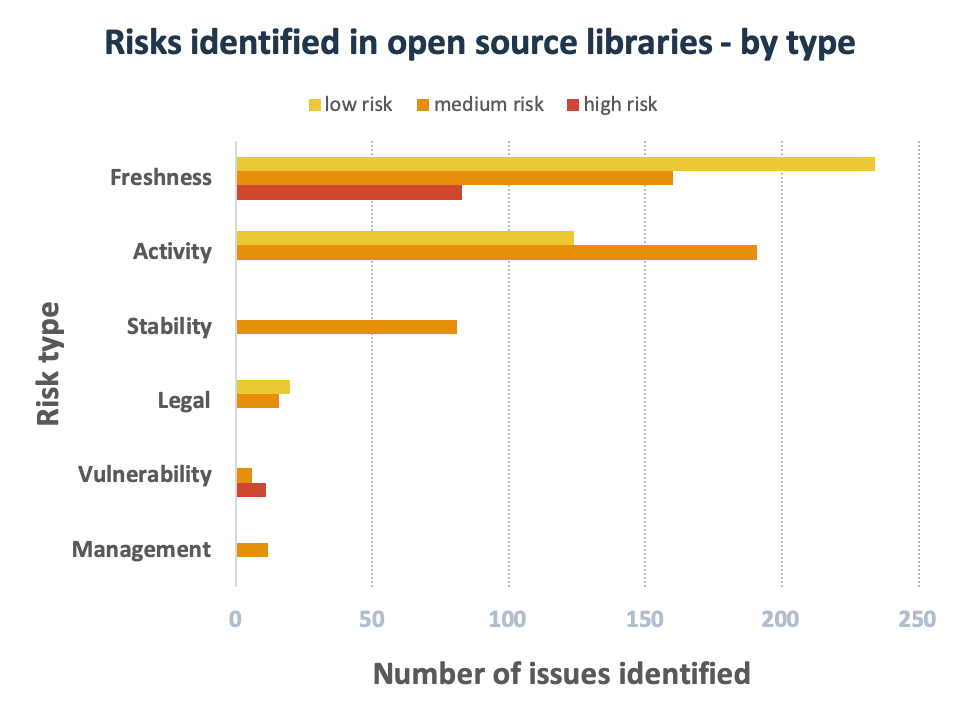

We tracked library usage among the 89 teams connected to our platform to automatically detect risks in several areas. Specifically, we looked at 1) Freshness: whether libraries are up to date, 2) Vulnerabilities: whether there are known security issues, 3) Activity: whether libraries are actively maintained, 4) Stability: whether stable versions are used, 5) Legal: whether any licenses are problematic, and 6) Management: whether teams follow the right practices for configuring dependencies. This yielded some interesting results.
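The six risk areas above can be illustrated as a simple rule-based check per dependency. The sketch below is hypothetical and is not how Sigrid implements these checks: the `Dependency` fields, the two-year inactivity threshold, and the accepted-license allow-list are all assumptions made for illustration, and whether a license is actually problematic depends on how the software is used. It treats a major version of 0 as “not yet stable”, following the Semantic Versioning convention for initial development.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy values, for illustration only.
MAX_DAYS_WITHOUT_RELEASE = 2 * 365
ACCEPTED_LICENSES = {"MIT", "Apache-2.0", "BSD-2-Clause", "BSD-3-Clause", "ISC"}


@dataclass
class Dependency:
    name: str
    used_version: tuple[int, ...]    # e.g. (1, 4, 2)
    latest_version: tuple[int, ...]
    known_vulnerabilities: int       # count of known advisories for used_version
    days_since_last_release: int
    license: str                     # SPDX-style identifier
    declared_in_manifest: bool       # False if copied into the repo by hand


def risk_categories(dep: Dependency) -> list[str]:
    """Return the risk areas flagged for one dependency, in a fixed order."""
    risks = []
    if dep.used_version < dep.latest_version:            # tuple comparison
        risks.append("Freshness")
    if dep.known_vulnerabilities > 0:
        risks.append("Vulnerabilities")
    if dep.days_since_last_release > MAX_DAYS_WITHOUT_RELEASE:
        risks.append("Activity")
    if dep.used_version and dep.used_version[0] == 0:    # 0.x = initial development
        risks.append("Stability")
    if dep.license not in ACCEPTED_LICENSES:
        risks.append("Legal")
    if not dep.declared_in_manifest:
        risks.append("Management")
    return risks


if __name__ == "__main__":
    deps = [
        Dependency("example-lib-a", (2, 1, 0), (2, 1, 0), 0, 40, "MIT", True),
        Dependency("example-lib-b", (0, 9, 3), (1, 4, 0), 2, 900, "GPL-3.0-only", False),
    ]
    for d in deps:
        print(d.name, risk_categories(d) or "no issues")
```

Counting a library as “at risk” when this list is non-empty mirrors the idea of a library having at least one issue, whatever its severity.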

In total, we identified 844 of the 1,600 libraries as having at least one issue, ranging from low to high risk. We noted three main types of library issues during the hackathon:

These three types of issues may lead to maintenance problems in the future, or to a reduced ability to respond to security weaknesses that have yet to be discovered.

Issues involving already known security vulnerabilities were limited to 17 of the 844 libraries at risk. Potential legal impacts were also rare (36), and we spotted few incorrectly configured dependencies (12). Although the numbers of vulnerabilities and legal risks are relatively low in absolute terms, the potential business impact of security and legal issues is rather high.
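The counts reported above can be turned into proportions with a few lines of arithmetic. This sketch uses only the figures from this article; the helper name `share` is our own.

```python
TOTAL_LIBRARIES = 1_600
LIBRARIES_AT_RISK = 844

# Libraries at risk, per issue type, as reported above.
ISSUE_COUNTS = {
    "known vulnerabilities": 17,
    "potential legal impact": 36,
    "incorrectly configured": 12,
}


def share(part: int, whole: int) -> float:
    """Return part / whole as a fraction between 0 and 1."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return part / whole


if __name__ == "__main__":
    print(f"At risk: {share(LIBRARIES_AT_RISK, TOTAL_LIBRARIES):.1%} of all libraries")
    for issue, count in ISSUE_COUNTS.items():
        print(f"{issue}: {share(count, LIBRARIES_AT_RISK):.1%} of at-risk libraries")
```

This works out to about 53% of all libraries being at risk, while known vulnerabilities, legal issues, and misconfigured dependencies account for roughly 2.0%, 4.3%, and 1.4% of the at-risk libraries respectively. Most of the risk therefore lies in the remaining issue types.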

Final thoughts

We were positively surprised to see that Maintainability stayed mostly under control, despite the pressure to deliver. The challenge now is to uphold these standards as these codebases truly grow. It was interesting to see how just two days of coding introduced risks in more than half of the open source libraries in use, including a number of security vulnerabilities. As said before, we realise that hackathons are all about quick results, and taking some temporary shortcuts is acceptable. If work on these products continues, we hope attention widens to include technical quality aspects such as these.

We had a great time participating in the hackathon as Code Jedis. The team behind Odyssey Momentum succeeded in creating an awesome digital environment in which we got in touch with many teams throughout the weekend. A unique experience in itself. We would like to thank all the teams we interacted with for the great collaboration.

We hope we got our message across that functionality is only one piece of the software pie. We know we spent our weekend well, working towards our shared mission:

Getting software right for a healthier digital world!

* For more information, see Better Code Hub. During Momentum, participants had access to our software assurance platform Sigrid to follow the teams’ progress and compare the quality of their solutions.

** SIG has the largest benchmark in the industry with more than 36 billion lines of code across hundreds of technologies. The expert consultants at SIG use the benchmark to evaluate an organization’s IT assets on maintainability, scalability, reliability, complexity, security, privacy and other mission-critical factors. Download our 2020 Benchmark Report to gain valuable insights about the current state of global software health.

Author: Martin Boerman, Consultant
