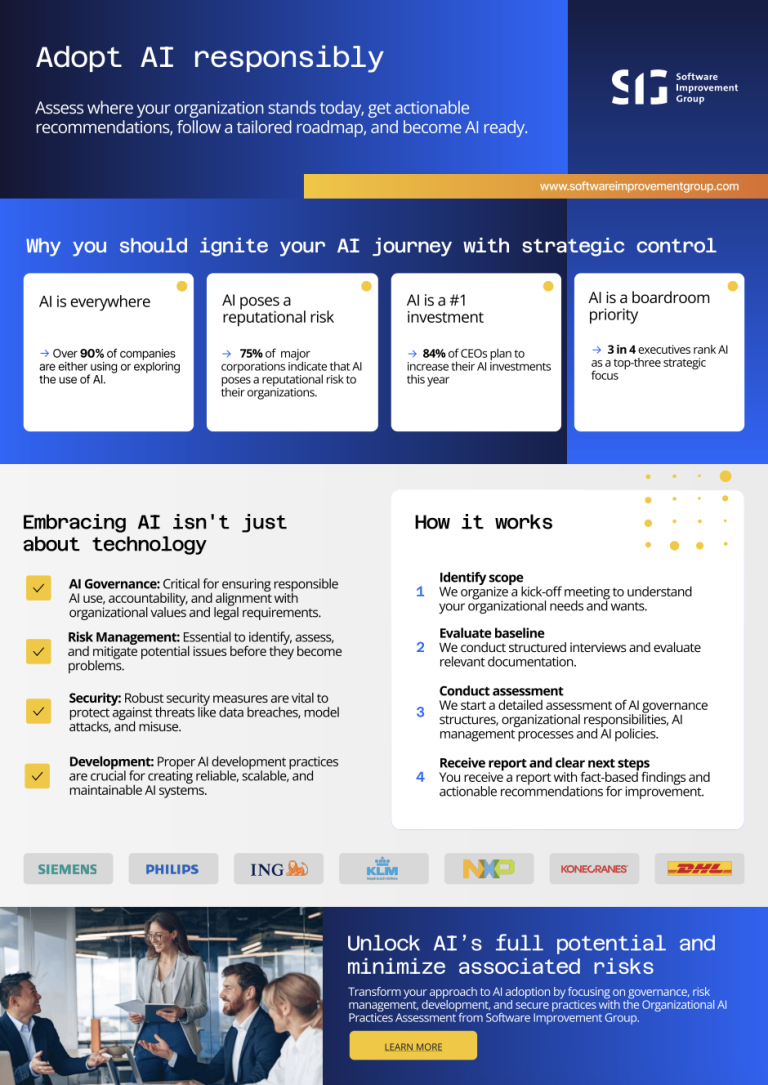

Put the right governance, risk controls, and decision-making structures in place — so you can ignite your AI journey with strategic control.

Effective governance, alignment, and oversight are essential to adopting AI responsibly and at scale.

Without clear governance structures, enterprises face legal, security, and reputational exposure as AI becomes embedded across systems and processes.

Engineering efforts often evolve independently from board-level expectations, creating gaps in oversight, accountability, and strategic value realization.

Many leadership teams struggle to move from small-scale AI experimentation to coordinated, enterprise-wide adoption due to missing roadmaps, standards, and cross-functional alignment.

With emerging AI regulation and heightened stakeholder expectations, enterprises need robust governance to ensure safe, transparent, and compliant AI deployment.

Strategic clarity for leadership

Ensure responsible AI use, accountability, and alignment with organizational values and legal requirements.

Identify, assess, and mitigate

Gain visibility into AI initiatives, understand the associated risks, and ensure compliance with relevant regulations.

People, process, and technology

Establish robust security measures to protect against new AI-specific threats such as data breaches, model attacks, and misuse.

Enable teams to scale safely

Support engineering teams with the structures they need. Spot quality, security, and reliability issues before they escalate.

Assess how prepared your organization is to adopt and scale AI safely, efficiently, and in alignment with business goals.

AI readiness resources

AI governance refers to the structures, controls, and decision-making processes that guide how AI is adopted across an organization. It includes risk management, security, ethical considerations, development practices, and alignment with regulatory requirements.

AI is expanding rapidly across organizations, but oversight, risk frameworks, and strategic alignment often lag behind. Executives, CISOs, and IT leaders face increasing pressure to manage legal, security, and reputational risks while enabling AI innovation responsibly.

Your readiness depends on whether you have clear governance structures, cross-functional alignment, secure development practices, and defined responsibilities across teams. Our assessments evaluate your governance, risk management, development practices, and skill gaps to determine where you stand.

Assessments focus on organizational governance, risk management, security controls, ethical AI considerations, engineering practices, cross-functional collaboration, and alignment with global standards such as ISO/IEC 5338 and emerging AI regulations.

Effective AI governance spans the entire enterprise—from the board and executive leadership to IT, security, engineering, data teams, and risk or compliance functions. Strong collaboration across these groups is essential for responsible, scalable adoption.

Emerging AI legislation and ethical expectations require organizations to have transparent processes, strong documentation, and robust accountability. Without these structures, enterprises risk delays, compliance gaps, and reputational harm.

If you have questions that aren’t covered here, feel free to reach out. We’re happy to provide more information or clarification.