AI in Telecom: Why strong foundations decide success in 2025


Summary

AI is transforming telecom at speed, but long-term success depends on strong software foundations.

This article explores why many AI systems fall short on quality and governance, and why telecom operators must treat AI like core infrastructure to scale safely and sustainably.

You’ll learn:

- Why AI systems differ from traditional software and why that matters in telecom

- The structural risks hidden in most AI codebases today

- How poor engineering and governance practices undermine resilience

- What telcos can do to build, govern, and scale AI with confidence

The state of AI adoption in telecom

In 2025, the telecom industry is betting big on artificial intelligence. Research shows that 97% of industry executives are assessing or already adopting AI in their operations. Over the last few years, commercial deployment of AI, particularly in generative AI use cases, has increased fourfold, underscoring how firmly the industry has committed to AI.

Optimism is running high, and for good reason. AI is already reshaping productivity, customer support, and network management. Recent surveys show that 65% of operators use generative AI to boost efficiency in coding, knowledge services, and content creation, while 54% have deployed it in customer service. Operators are also positioning themselves as AI providers, embedding AI into their infrastructure and offering AI-powered services to customers.

But this momentum alone can’t guarantee success. AI systems in telecom can be fragile, hard to maintain, poorly tested, and vulnerable to hidden risks. Without disciplined engineering and governance, AI can amplify flaws instead of creating value.

For telecoms, success won’t come from adopting AI the fastest, but from building it on reliable, resilient software foundations.

AI vs traditional software

To understand AI’s full potential (and possible pitfalls) in telecom, we must first define what we mean by ‘AI’, beyond the buzzwords.

AI is not just another software tool; it represents a different engineering model. Traditional software is deterministic: developers write rules, and the program executes them in predictable ways.

AI systems, by contrast, are data-driven. They learn from past inputs, adapt over time, and generate probabilistic outputs such as predictions, classifications, or recommendations. This makes them invaluable in telecom, where networks generate vast and complex datasets. But it also makes them harder to test, govern, and maintain.
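To make the contrast concrete, here is a minimal Python sketch. Everything in it is illustrative: the 0.8 utilization threshold, the tiny labelled history, and the function names are hypothetical, and a production model would use far richer features and an established ML library. The rule-based function gives the same yes/no answer for a given input because an engineer wrote the rule; the one-feature logistic regression derives its decision boundary from past examples and returns a probability instead.

```python
import math

# Traditional software: an engineer writes the rule (hypothetical 0.8 threshold),
# so the same input always yields the same yes/no answer.
def is_congested_rule(utilization: float) -> bool:
    return utilization > 0.8

def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# AI-style system: a one-feature logistic regression learns its weight and bias
# from labelled history via batch gradient descent instead of following a fixed rule.
def train_logistic(xs, ys, lr=0.5, epochs=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = _sigmoid(w * x + b) - y  # gradient of log-loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# The learned model outputs a probability, not a hard yes/no.
def congestion_probability(model, utilization: float) -> float:
    w, b = model
    return _sigmoid(w * utilization + b)

# Hypothetical history: link utilization and whether congestion was observed.
history_util = [0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
history_congested = [0, 0, 0, 0, 1, 0, 1, 1, 1]

model = train_logistic(history_util, history_congested)
print(is_congested_rule(0.85))  # True, by construction of the rule
print(round(congestion_probability(model, 0.85), 2))
```

If the traffic patterns behind `history_util` change, the rule keeps behaving exactly as written, while the learned model only stays accurate if it is retrained on new data. That is the property that makes AI systems harder to test and govern.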

Within the AI family, several subfields are particularly relevant:

- Machine Learning (ML): Models trained on data to identify patterns and predict outcomes, such as spotting fraud in telecom transactions.

- Deep Learning (DL): A subset of ML that uses neural networks with many layers, effective for recognizing speech, images, or large-scale traffic patterns.

- Generative AI (GenAI): A further subset of DL designed to create new content. In telecom, this can mean generating synthetic training data or summarizing network logs.

Key distinction: All generative AI is AI, but not all AI is generative AI. For example, a model forecasting network congestion is an AI system but not generative AI; a language model writing customer responses is generative AI.

Standards such as ISO/IEC 5338, co-created by Software Improvement Group (SIG), classify AI systems as distinct from traditional software because they:

- Learn from data rather than follow fixed rules.

- Require retraining as data changes.

- Make probabilistic judgments rather than produce deterministic outputs.

For telecom operators, this distinction is critical. AI systems underpin network optimization, anomaly detection, and predictive maintenance. Generative AI is emerging in customer-facing and productivity use cases. Both offer significant efficiency gains, but each carries unique risks that demand rigorous governance and disciplined engineering practices. These risks go beyond technical complexity to include bias, security vulnerabilities, and lack of transparency, areas that require new governance approaches.

But knowing how AI differs from traditional software is only part of the picture. The deeper question is whether these systems are engineered to last.

Many AI systems aren’t built to last

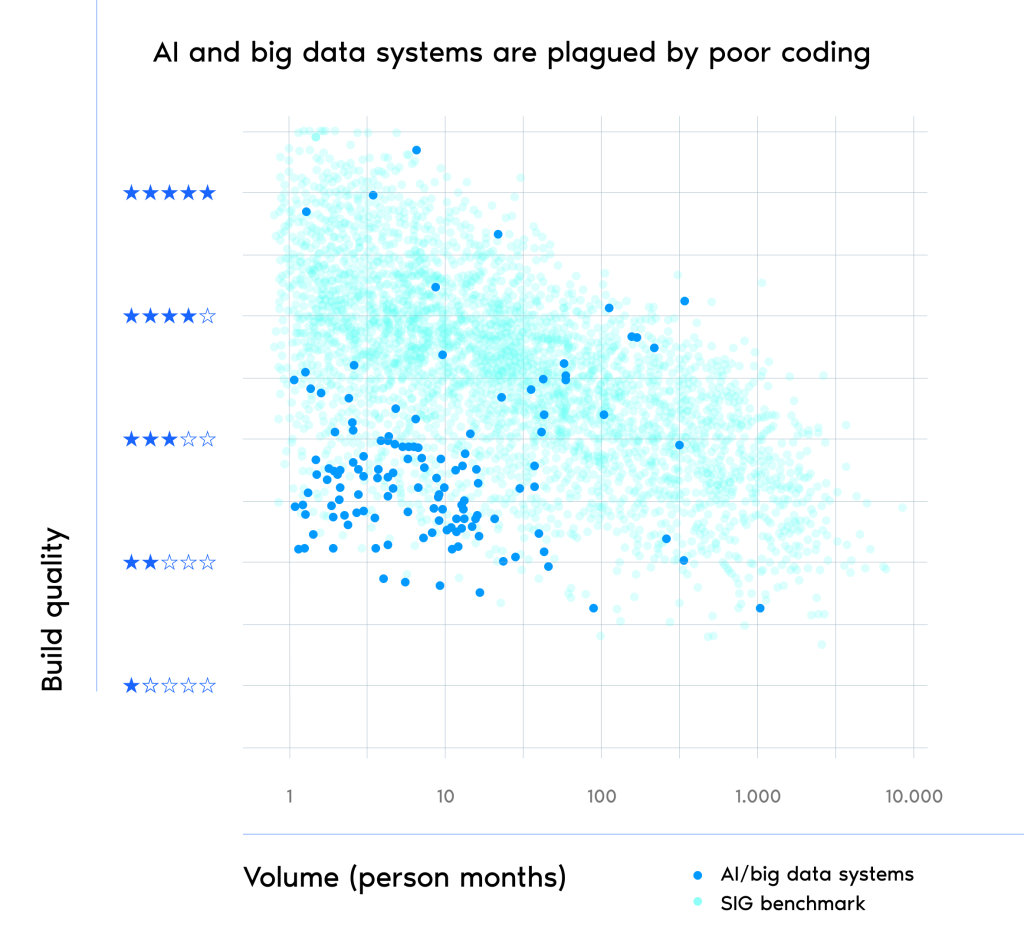

AI systems are only as strong as the software foundations they rely on. SIG’s research shows that most fall short: 73% of AI and big data systems show structural quality issues, scoring below the benchmark average. On average, these systems rate just 2.7 out of 5 stars*, significantly lower than traditional software.

*A note on SIG’s star ratings: SIG’s star ratings evaluate and compare software quality. The ratings range from 1 to 5 stars, but the underlying scores are calculated on a finer scale running from 0.5 to 5.5, which is why an average such as 2.7 is possible. A 3-star rating represents the market average. The ratings are based on comparing a system’s properties to SIG’s benchmark, which includes thousands of systems and is recalibrated yearly.

Our dataset of AI/big data systems was compiled by selecting systems that revolve around statistical analysis or machine learning, based on the technologies they use (e.g. R and TensorFlow) and their documentation.

The reasons for these low scores can be traced back to engineering practices. Many AI projects are led by data scientists focused on producing insights quickly, not on applying proven software engineering methods. As a result, AI systems often:

- Contain complex, bloated code that is difficult to modify, analyze, or reuse.

- Have almost no test coverage—just 1.5% compared to 43% in traditional software—making errors harder to detect and models riskier to update.

- Suffer from low maintainability and limited scalability.

- Carry security and privacy vulnerabilities.

- Are poorly documented, making knowledge transfer and long-term use difficult.

For telecom operators, these weaknesses are more than technical inconveniences. Networks demand reliability and uptime. When AI systems are built on fragile code, they can undermine resilience instead of strengthening it. In an industry where even small outages have outsized customer impact, deploying AI without strong engineering discipline increases operational and reputational risk.

When AI multiplies problems

The risks of fragile AI don’t stop at poor build quality. Left unchecked, AI has a tendency to amplify existing flaws across an organization’s technology stack. A coding shortcut or an undocumented system may seem minor. But when AI accelerates delivery without strong governance, those weaknesses can quickly cascade.

Industry research reinforces this concern. McKinsey’s latest State of AI report notes that adoption is accelerating. Yet many enterprises still lack effective risk-mitigation practices, leaving them exposed to accuracy lapses, cybersecurity incidents, and IP vulnerabilities.

IBM adds another warning: more autonomous, “agentic” AI systems magnify these risks because increased autonomy often means less transparency and control. Without strong governance, such systems can make decisions that are difficult to detect, explain, or reverse.


This gap between adoption and oversight is widespread. One 2025 AI Governance Survey found that nearly half of organizations don’t monitor their AI systems for drift, misuse, or inaccuracy—basic guardrails for safe deployment. Many leaders admit that pressure to “move fast” has kept them from implementing the governance structures needed to scale responsibly.

For telecom operators, the implications are serious. Networks are already complex, highly regulated, and mission-critical. When AI is introduced into environments with legacy systems, hidden technical debt, or unclear ownership, it can:

- Automate bad patterns at scale, turning small inefficiencies into systemic fragility.

- Compound security vulnerabilities, as AI-generated code may introduce exploitable flaws.

- Undermine architecture discipline, creating dependencies that are costly to untangle later.

- Erode trust and compliance, particularly where AI outputs touch sensitive customer data or regulated processes.

In short, AI is a force multiplier. If the foundation is strong, it accelerates innovation. If the foundation is weak, it accelerates failure. In telecommunications, where even brief outages or compliance breaches have outsized impact, the stakes couldn’t be higher. These risks, however, are not inevitable.

The path forward: build AI like critical infrastructure

To unlock long-term value, telecoms must treat AI as serious software, not as an experiment. That requires the same engineering discipline applied to core network systems: architecture, documentation, testing, and governance.

SIG’s research highlights four essential steps:

- Apply software engineering best practices

Modular, maintainable, and well-documented code is the foundation for AI that can evolve over time. This includes security practices, where AI projects often lag behind traditional systems.

- Bridge AI and software engineering teams

Many AI systems are still built in silos by data scientists. Integrating software engineers into AI projects ensures stability and avoids creating technical debt or legacy systems from day one.

- Strengthen AI governance

Clear accountability and governance policies are critical. Telcos must align AI development with standards for risk, compliance, and transparency—just as they do with other mission-critical systems.

- Implement continuous validation

AI systems degrade as data changes. Regular testing and retraining are essential to maintain safe, scalable performance across telecom networks.
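Continuous validation can start with something as simple as comparing the data a model was trained on with the data it sees in production. The Python sketch below uses the Population Stability Index (PSI), a common drift metric chosen here for illustration; it is not a method prescribed by SIG or by ISO/IEC 5338. The function names, the 10-bin layout, the sample feature values, and the 0.2 alert threshold (an assumed rule of thumb that teams usually tune) are all hypothetical. The monitored feature could be any model input, such as hourly cell traffic.

```python
import math
from typing import List, Sequence

def _bin_proportions(values: Sequence[float], lo: float, width: float, bins: int) -> List[float]:
    counts = [0] * bins
    for v in values:
        # Clamp values outside the reference range into the edge bins.
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    # A small floor keeps empty bins from producing log(0) in the PSI sum.
    return [max(c / len(values), 1e-6) for c in counts]

def population_stability_index(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """PSI between a reference (training-time) sample and a live sample; 0 means identical bin shares."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 if the reference is constant
    ref = _bin_proportions(reference, lo, width, bins)
    cur = _bin_proportions(live, lo, width, bins)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def needs_retraining(reference, live, threshold: float = 0.2) -> bool:
    # Hypothetical policy: flag the model for review and retraining when PSI exceeds the threshold.
    return population_stability_index(reference, live) > threshold

# Hypothetical feature values: traffic seen at training time vs. two live windows.
training_traffic = [float(i % 100) for i in range(1000)]
stable_window = [float(i % 100) for i in range(500)]
shifted_window = [float(i % 100) + 50 for i in range(500)]

print(needs_retraining(training_traffic, stable_window))   # False
print(needs_retraining(training_traffic, shifted_window))  # True
```

In practice a check like this would run on a schedule for each important input and for the model's outputs, with alerts routed to a named owner, so that drift triggers retesting and retraining rather than going unnoticed.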

Treating AI as a core capability, engineered and governed like critical infrastructure, is the only way to unlock its full potential in telecom. Done right, AI will improve productivity and customer experience, delivering resilience, scalability, and trust at the heart of the network.

Ensure your software is always two steps ahead

Telcos racing to deliver high-speed, AI-powered, and always-on services can’t afford fragile software foundations. From resilience to sustainability, software quality is a strategic multiplier.

Modernize your stack, secure your network, and stay in control.