Management Dashboard

Trusted by 400+ leading enterprises

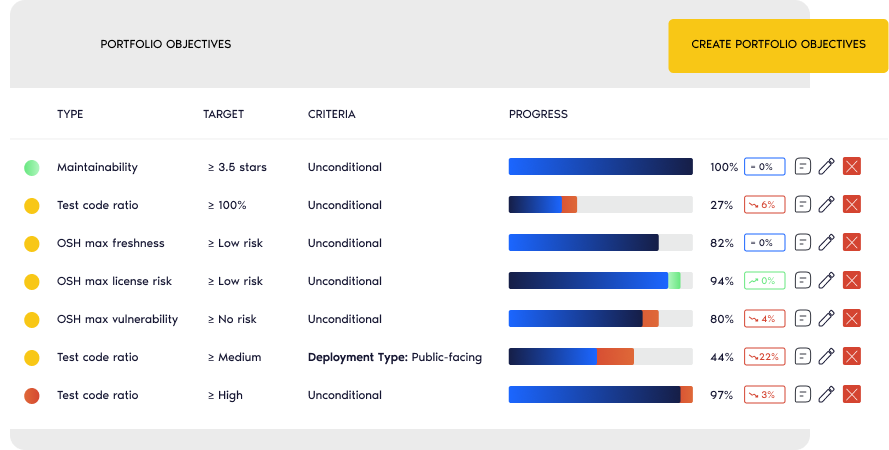

Sigrid® brings clarity to complexity, combining deep code insights with expert guidance to help you govern your software landscape and harness the full power of AI.

Ensure your technology is a driver of success, not a source of risk.

Analyze your source code

Send your source code to Sigrid — our platform benchmarks it against the world’s largest software dataset.

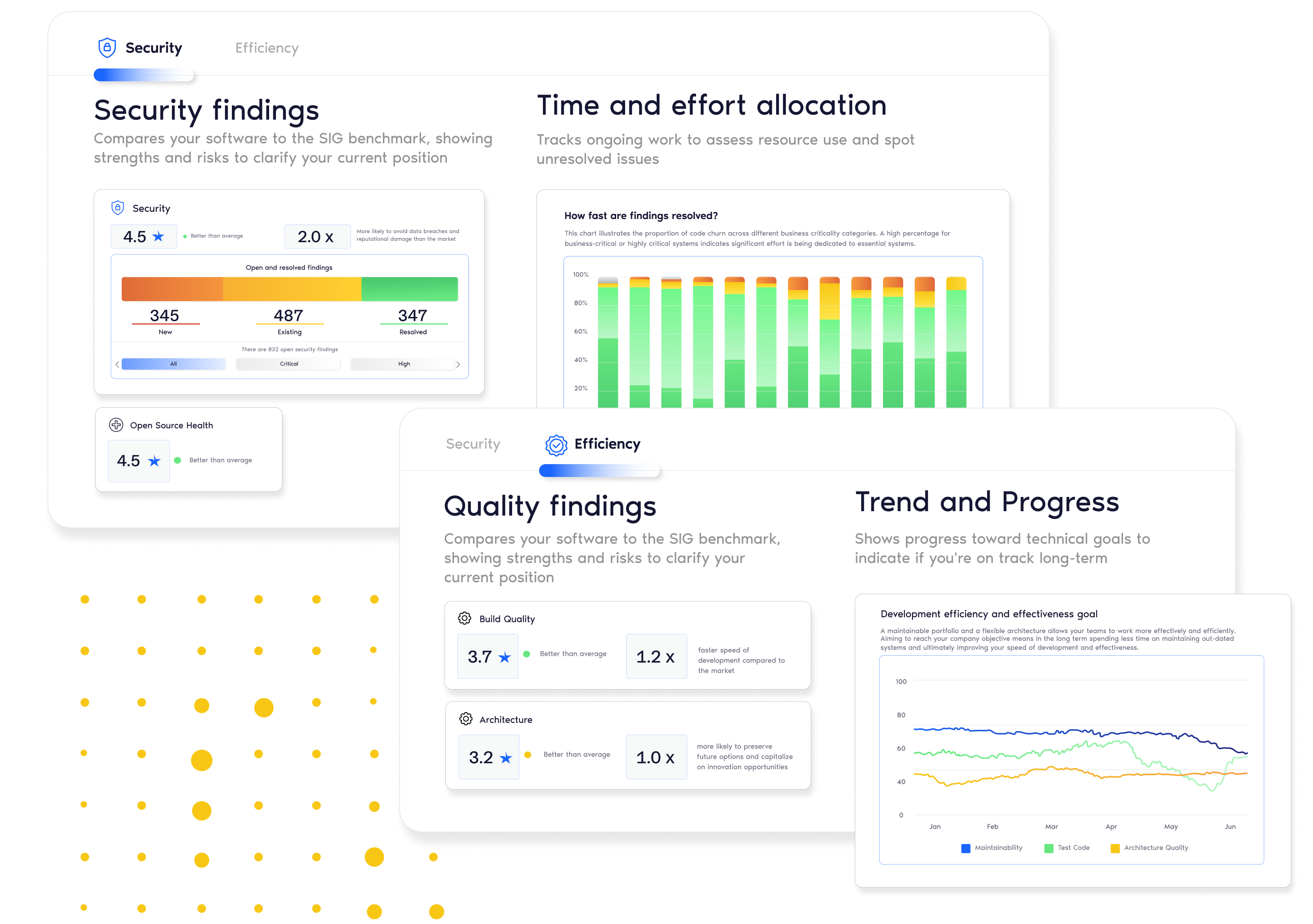

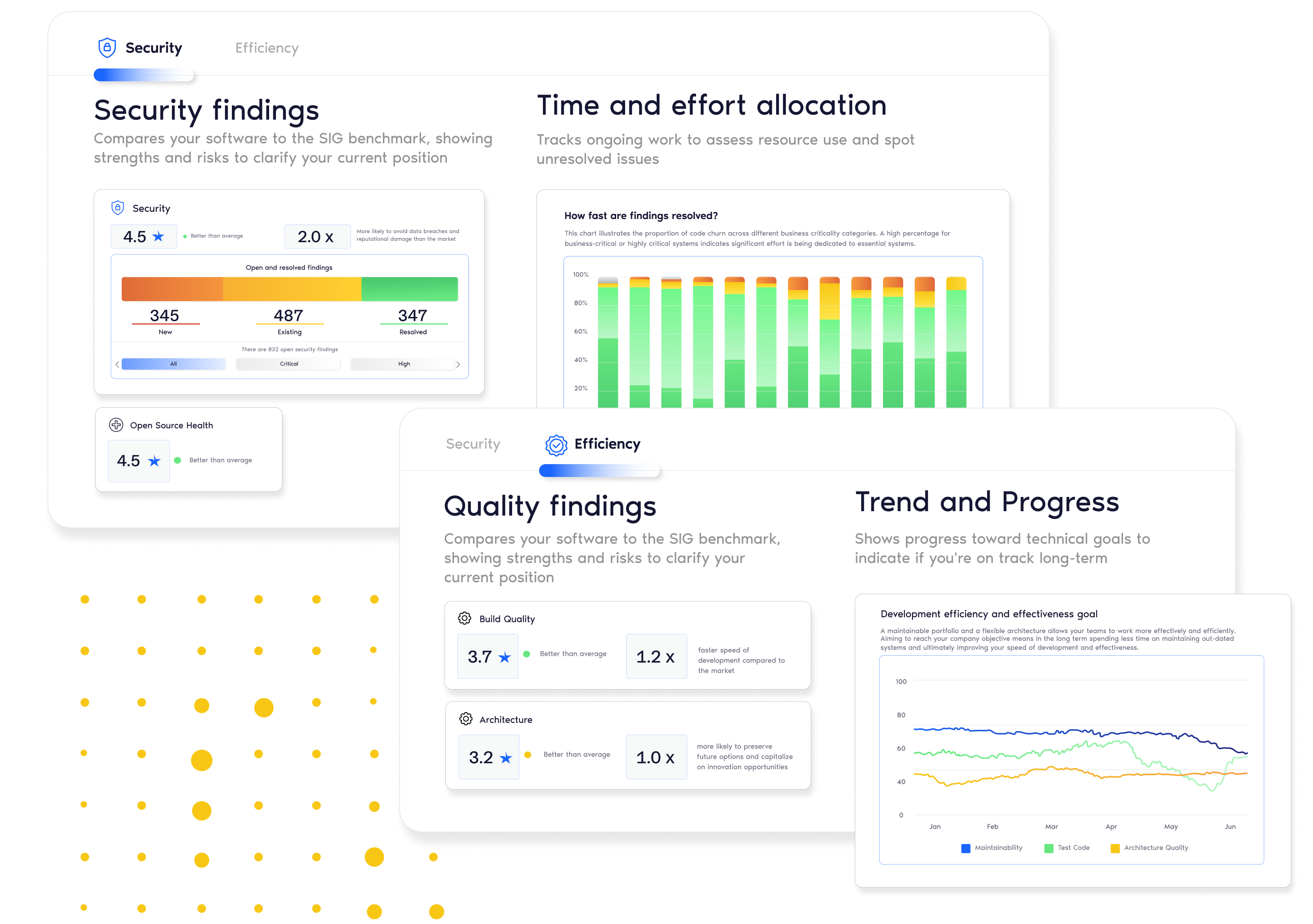

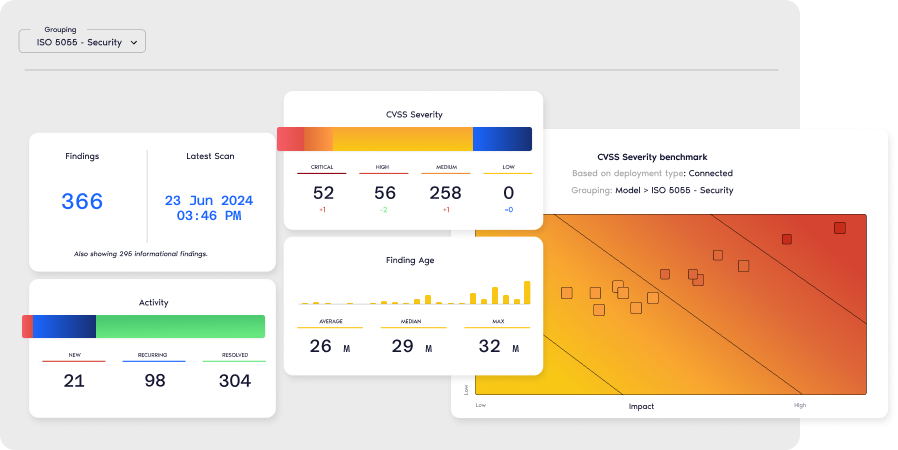

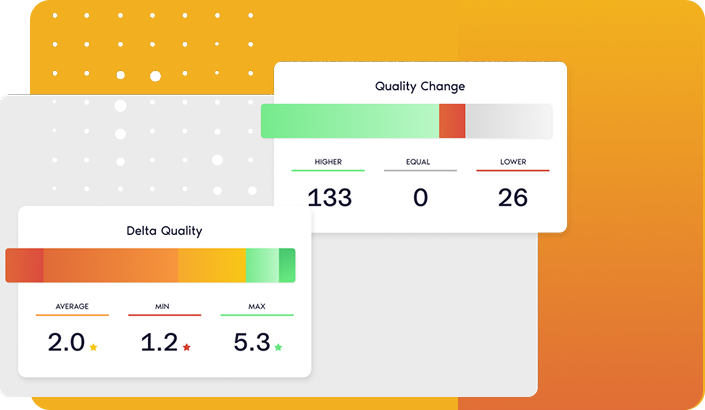

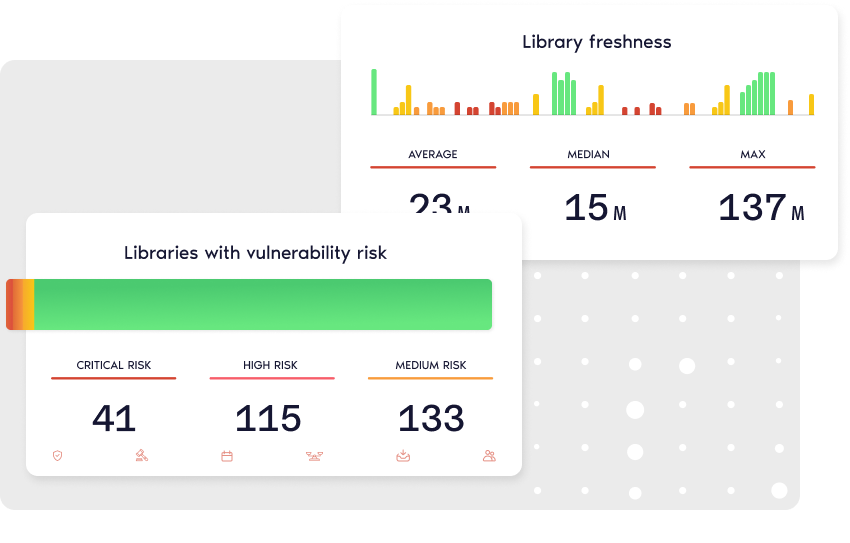

Get your risk profile

Sigrid identifies weak spots and bottlenecks in your code, architecture, and third-party systems.

Act on expert advice

Our consultants help you prioritize fixes and align improvements with business goals.

Keep improving

Sigrid continuously monitors your software to flag risks early and ensure lasting performance.

“Tooling like Sigrid provides transparency, allowing us to manage our software proactively and maintain high standards. This is crucial for securely sharing personal data in our digital processes and staying ahead of potential security risks.”

“With the help of Software Improvement Group, and their platform Sigrid, we can invest more effectively in code quality improvement and development.”

“Software Improvement Group helps us access and interpret the data so that we can improve things better and more quickly.”

“We chose Sigrid to validate the strength of our code base, ensuring our foundations are as robust as we believe. This allowed us to focus our investments on targeted improvements and bolster our security, turning insight into action for a safer, stronger product.”

“We needed an independent partner to help us measure the systems. They can tell us that everything is perfectly fine, but we needed to know for sure. With Sigrid®, we’re getting more guarantees that the software that’s being delivered is up to par.”

Ensure your software is always two steps ahead.

Sigrid® is technology-agnostic and currently supports over 300 different technologies. Naturally, we support popular languages such as Java, C#, and Python. We also support more specialized but still widely used technologies like COBOL, PL/SQL, and Docker.

On average, we add about 20 new technologies per year. If there's a specific technology you're curious about, we'd be more than happy to check our current level of support. We're always expanding our capabilities, so even if we don't fully support something today, there's a good chance we can add or improve support if your project needs it.

Generally, to assess the quality of something, you need a sense of context. Each piece of software may have a widely different context. To put objective thresholds on code quality, at SIG we use technology-independent code measurements and compare those to a benchmark.

A benchmark on quality is meaningful because it provides an unbiased norm of how well you are doing.

The context for this benchmark is “the current state of the software development market”.

This means that you can compare your source code to the code that others are maintaining.

To compare different programming technologies with each other, the metrics capture abstractions that occur universally, such as the volume of pieces of code and the complexity of the decision paths within them. In this way, system size can be normalized to “person-months” or “person-years”, which indicate the amount of developer work done per time period. Those numbers are, in turn, based on benchmarks.

In summary, Sigrid compares analysis results for your system against a benchmark of 30,000+ industry systems. This benchmark set is selected and calibrated (rebalanced) yearly to keep up with the current state of software development. “Balanced” here can be understood as a representative spread of the “system population”. It spans old and new technologies, from legacy systems to modern JavaScript frameworks. In terms of technologies, the set is skewed towards the programming languages that are most common today, because that best represents the current state. The metrics underlying the benchmark approach a normal distribution. This serves as a sanity check that the benchmark is a fair representation, and it allows statistical inferences about “the population” of software systems.

The code quality score compared to the benchmark is expressed in a star rating on a scale from 1 to 5 stars. It follows a 5%-30%-30%-30%-5% distribution.
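The arithmetic behind this distribution can be made concrete with a small sketch. It assumes the five shares are assigned from the bottom of the benchmark upwards (the lowest 5% of systems get 1 star, the next 30% get 2 stars, and so on). The function name `stars_for_percentile` and the percentile input are illustrative assumptions, not part of Sigrid.

```python
from bisect import bisect_right
from itertools import accumulate

# Share of benchmark systems per star level (1 to 5 stars), taken from the text.
SHARES = [5, 30, 30, 30, 5]

# Cumulative upper bound of each star band, in percent.
CUMULATIVE = list(accumulate(SHARES))  # [5, 35, 65, 95, 100]


def stars_for_percentile(percentile: float) -> int:
    """Map a benchmark percentile (0 = lowest, 100 = highest) to 1..5 stars.

    Assumption: bands are half-open, so exactly 5.0 falls in the 2-star band.
    """
    if not 0 <= percentile <= 100:
        raise ValueError("percentile must be between 0 and 100")
    # Number of band boundaries at or below the percentile.
    band = bisect_right(CUMULATIVE, percentile)
    # The very top of the scale (100) still belongs to the 5-star band.
    return min(band + 1, 5)


if __name__ == "__main__":
    for p in (2, 20, 50, 80, 97):
        print(f"percentile {p}: {stars_for_percentile(p)} stars")
```

Read this way, the cumulative bounds show that 5% + 30% = 35% of systems sit below the 3-star band, and the same share sits above it.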

Technically, its metrics range from 0.5 to 5.5 stars.

This is a matter of convention, but it also avoids a “0” rating because 0 is not a meaningful end point on a quality scale. The middle 30% lies between 2.5 and 3.5, with all scores within this range rated as 3 stars, representing the market average.

Even though 50% of systems necessarily score below average (3.0), 35% of systems score below the 3-star range (below 2.5), and 35% score above it (above 3.5). To avoid a suggestion of extreme precision, it is helpful to think about these stars as ranges, such that 3.4 stars would be considered “within the expected range of market average, on the higher end”. Note that rounding in the calculation is always downwards, with a maximum of 2 decimals of precision. So, a score of 1.49 stars will be rounded down to 1 star.
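The mapping from a score to a whole star rating can be sketched as follows. This is a minimal illustration, not Sigrid's implementation: the function name `star_rating` is hypothetical, and it assumes each star covers a half-open band (for example, 2.50 up to but not including 3.50 is 3 stars) and that scores are truncated to two decimals before the band is chosen.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_FLOOR


def star_rating(score: float) -> int:
    """Convert a score on the 0.5 to 5.5 scale to a whole star rating (1..5)."""
    if not 0.5 <= score <= 5.5:
        raise ValueError("score must be between 0.5 and 5.5")
    # Truncate (never round up) to at most two decimals, as the text describes.
    truncated = Decimal(str(score)).quantize(Decimal("0.01"), rounding=ROUND_DOWN)
    # Each star covers a band of width 1 centred on the whole number,
    # so shifting by 0.5 and flooring selects the band.
    stars = int((truncated + Decimal("0.5")).to_integral_value(rounding=ROUND_FLOOR))
    # 5.50 is the top of the scale and still counts as 5 stars.
    return min(stars, 5)


if __name__ == "__main__":
    for s in (0.5, 1.49, 2.5, 3.4, 5.5):
        print(f"{s} -> {star_rating(s)} stars")
```

`Decimal` is used instead of float arithmetic so that truncating to two decimals does not pick up binary floating-point error.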

Sigrid CI can be used to publish main branch code to Sigrid for baseline analysis, and to get feedback on pull requests.

Integrating Sigrid into your development environment serves two purposes. First, it publishes your systems to Sigrid after every change, ensuring Sigrid is always up to date and removing the need for manual uploads. Second, it allows you to give your development teams direct feedback after each change. Sigrid supports integrations with 10+ different development environments, such as GitHub or Bitbucket.

Sigrid uses your anonymized repository history to calculate metrics on which code has been changed, and when those changes were made. These statistics do not contain personal information. In fact, if you use Sigrid CI, the developer names are anonymized client-side, that is, before anything is published to Sigrid.
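To illustrate what client-side anonymization can look like in general, here is a minimal sketch. It is an assumption for illustration only and does not describe how Sigrid CI actually anonymizes names: the salted SHA-256 approach, the record layout, and the names `pseudonymize` and `anonymize_history` are all hypothetical.

```python
import hashlib


def pseudonymize(name: str, salt: str) -> str:
    """Replace a developer name with a stable token derived from a salted hash."""
    digest = hashlib.sha256((salt + name).encode("utf-8")).hexdigest()
    return digest[:12]


def anonymize_history(commits: list[dict], salt: str) -> list[dict]:
    """Return copies of the commit records with the author names replaced.

    Dates and file paths are kept, since those are what change metrics need.
    """
    return [
        {**commit, "author": pseudonymize(commit["author"], salt)}
        for commit in commits
    ]


if __name__ == "__main__":
    # Hypothetical history records; a real history would come from the repository.
    history = [
        {"author": "Alice Example", "date": "2024-01-15", "file": "src/app.py"},
        {"author": "Bob Example", "date": "2024-01-16", "file": "src/db.py"},
        {"author": "Alice Example", "date": "2024-01-17", "file": "src/app.py"},
    ]
    for record in anonymize_history(history, salt="local-secret"):
        print(record)
```

Because the same name always maps to the same token, statistics on which code changed and when can still be computed, while the names themselves never leave the machine that runs the script.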

You can find more information on Sigrid data usage in our Privacy Statement.