The history of AI: From Alan Turing to today’s resurgence


Artificial Intelligence has been around for decades

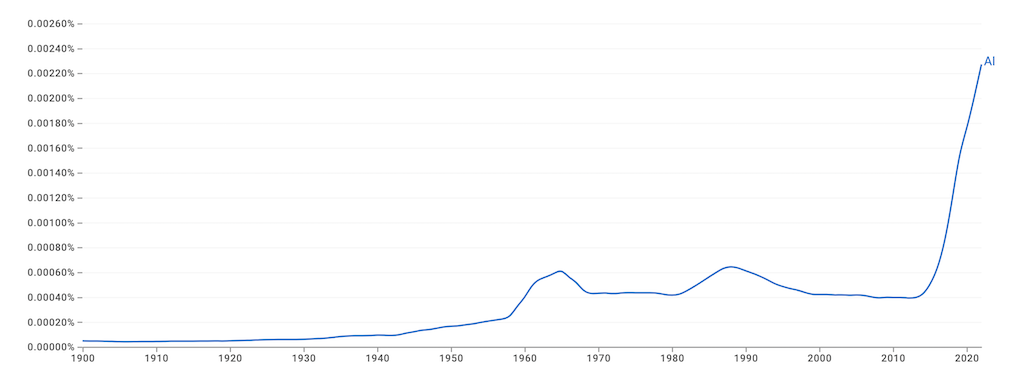

Artificial Intelligence (AI) is the buzzword of our time and the fastest-adopted business technology in history. With businesses everywhere scrambling to implement it, it seems like the next big thing. But AI’s history stretches back much further than you might think: just look at how interest has peaked in the literature over the years.

To truly understand where AI is headed, we need to first look back to where it all began. In this blog post, we’ll walk through the history of AI and explore how it has evolved into the technology transforming the world and businesses today.

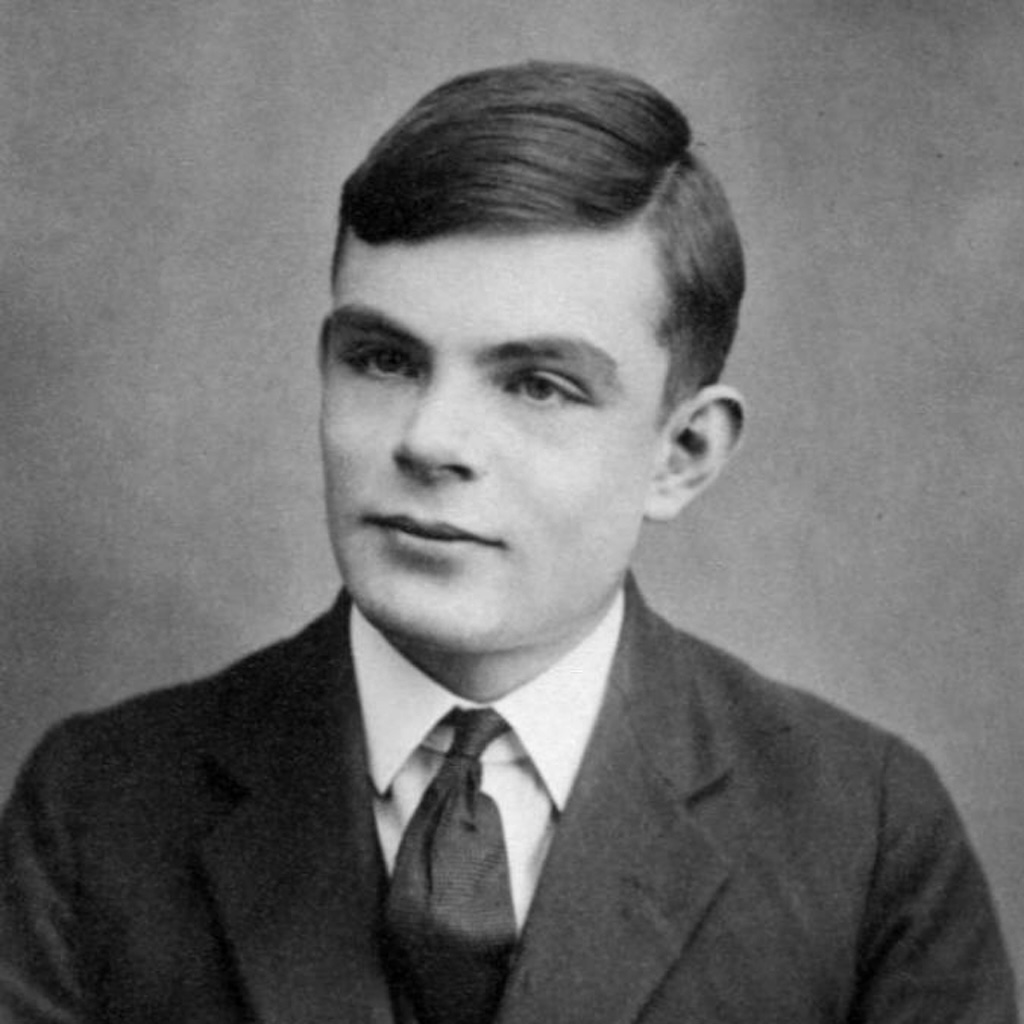

Alan Turing, the father of AI (1950s)

When we talk about AI’s origins, we start with Alan Turing. Known as the “father of AI,” Turing was well ahead of his time, and in the mid-1900s he formalized the concept of AI.

As Dr. Lars Ruddigkeit from Microsoft noted during our SCOPE 2024 conference, recent advancements like ChatGPT might make AI appear new, but they’re actually built on a long history of research.

“In 2022, ChatGPT really scrambled our beliefs, but it was not something that started five years ago,” Ruddigkeit explained. “The field was founded by Alan Turing, and his first publication was in 1950. We’ve been building on his research for decades to reach the breakthroughs we see today.”

The Turing Test

Turing was a mathematician best known for the Turing Test: a way of measuring whether a machine can think.

Originally called the Imitation Game, it was a test of a machine’s ability to convincingly mimic human intelligence. Turing argued that if a human can’t tell whether they’re talking to a machine or a person, and it turns out to be a machine, then the machine should be regarded as intelligent.

Cybernetics and the first algorithms

Turing’s work on AI was deeply influenced by cybernetics, the study of communication and control in machines and living organisms. This field played a major role in the development of the Turing Test, as it provided a framework for understanding how machines could be programmed to imitate human behavior.

The Turing Test continues to be a benchmark for measuring progress in AI and machine learning. The rise of large language models has reignited discussions (and mixed opinions) about whether the Turing Test has now been passed.

Turing’s Influence on AI

Turing’s influence stretches far beyond his time. His ideas were the foundation of AI as we know it, and his belief in machines that could think like humans planted the seed for many of the AI advancements reshaping the world today.

The rise of symbolic AI and early hype (1960s-1970s)

Fast forward to the 1960s and 1970s, and optimism around AI was at an all-time high.

The Dartmouth Summer Research Project on Artificial Intelligence in 1956 had introduced the term “Artificial Intelligence,” and it sparked lasting excitement. Researchers believed that human-level intelligence was just around the corner.

This period, often referred to as the rise of Symbolic AI, focused on systems that used symbols and rules to represent human knowledge, aiming to mimic human reasoning and problem-solving.

And while there were, no doubt, some promising breakthroughs, this era was also marked by the overhyping of early AI research, particularly around machine translation and neural networks. Overpromising by developers, extensive media promotion, and unrealistic public expectations set the stage for the disappointments to come.

AI Winter: Setbacks and realism (1970s-1980s)

As hype gave way to reality, AI faced a significant setback in the 1970s and 1980s, a period commonly referred to as the first AI Winter. The term was coined in 1984 at the annual meeting of the American Association for Artificial Intelligence (AAAI) after years of unmet promises.

Developers struggled to meet the sky-high expectations set by early optimism, and the public’s excitement faded. Funding dried up, and progress slowed significantly.

This was a period of reflection, where developers realized they had overestimated the technology’s capabilities and underestimated the complexity of creating true artificial intelligence.

Neural networks and machine learning’s resurgence (1980s-1990s)

By the late 1980s and into the 1990s, AI experienced a revival, largely driven by breakthroughs in neural networks and machine learning.

Researchers realized that AI systems could be enhanced using methods rooted in mathematics and economics, such as game theory, stochastic modeling, and operations management.

Techniques like genetic algorithms and deep learning started to mature, allowing AI systems to make better predictions, optimize processes, and solve complex problems more effectively.

This resurgence led to the development of new AI products and technologies, ushering in a new era of commercial AI applications that started to show real promise.

The rise of big data and AI’s modern renaissance (2000s-present)

In the 2000s, big data and cloud computing transformed AI yet again. With massive amounts of data available and the computational power to process it, AI’s capabilities exploded.

Machine learning, a subset of AI that enables systems to learn and improve from data, became the driving force behind countless innovations.

Recent AI milestones

Take Google’s self-driving car project from 2009, now known as Waymo, which marked the beginning of AI-powered vehicles capable of driving themselves without human intervention.

Or Siri, Apple’s AI-powered virtual assistant from 2011, which became a key innovation, making voice-activated AI a part of everyday life.

Then there’s the Face2Face program from 2016, which enables users to create realistic deepfake videos, raising serious ethical concerns about AI’s ability to manipulate visual content.

Current landscape and the role of AI in business

Today, around 77% of companies are using or exploring some form of AI in their business. AI is transforming industries and creating new opportunities, but it is also bringing new challenges.

Issues like bias, data privacy, and cybersecurity risks are top concerns. In fact, 75% of Chief Risk Officers now view AI as a reputational risk, with particular worries around generative AI’s potential to spread misinformation and reinforce bias.

With regulations like the EU’s AI Act on the horizon, businesses must also be prepared to navigate new compliance hurdles to ensure safe and transparent AI systems.

Learning from the past to shape the future

AI’s evolution—from Alan Turing’s early work to today’s resurgence—shows the importance of learning from both its successes and challenges. As we move forward, it’s crucial that businesses approach AI with a strong focus on governance, risk management, and security.

This is where understanding AI readiness comes in. Our AI readiness guide offers practical steps for board members, executives, and IT leaders on how to implement AI responsibly, ensuring they’re prepared to harness AI’s power while mitigating its risks.

The AI hype may be exciting, but staying on the right track requires thoughtful planning and preparation.