Artificial intelligence in business: The essential toolkit for your executive program


Shocking stats related to artificial intelligence in business

The artificial intelligence (AI) landscape has shifted from hype to reality. What was once considered futuristic or experimental is now delivering tangible, game-changing results across industries.

This toolkit, which is based on our AI readiness guide, is designed to help business leaders understand how to implement AI in business. As we demystify the technology, we hope you will gain a clearer understanding of the role of artificial intelligence in business and learn how to integrate AI into your organization and its existing frameworks.

Today, 77% of businesses are exploring or actively implementing artificial intelligence, and by 2025, AI capabilities won’t just be a competitive advantage—they’ll be essential to staying relevant in industries like marketing tech, HR tech, and customer support.

For certain industries, such as government or manufacturing, there might still be time to observe and adapt. But in fast-moving sectors, the window for “waiting and seeing” is closing quickly.

And when you begin to act, please don’t make the mistake of treating artificial intelligence in isolation—successful AI adoption is about building on your existing frameworks, expertise, and governance to integrate AI effectively and responsibly.

And this isn’t something that can be built overnight.

The risks of adopting AI late, or adopting it poorly, are becoming increasingly clear. Studies reveal that 61% of businesses lack internal AI guidelines, and our research shows that nearly 75% of all AI systems face severe quality issues that can lead to serious failures.

Without a clear strategy, companies risk falling behind competitors, facing compliance challenges, or suffering from poor implementation.

Whether you’re just getting started or aiming to sharpen your strategy, this guide will equip you with the insights needed to turn artificial intelligence into a powerful strategic business advantage.

So, let’s dive in.

1. Why is AI considered important for business leaders?

Before diving into how to implement artificial intelligence, it’s important to get your head around some useful basics. It’s clear many business leaders still aren’t at all prepared for the changes to come.

A recent Gartner report reveals that while 60% of CIOs include AI in their innovation strategies, fewer than half are confident in their ability to manage its associated risks. Similarly, the World Economic Forum highlights that 75% of Chief Risk Officers (CROs) from major corporations are concerned that the use of AI could damage their organization’s reputation.

A recent SAP survey revealed that 58% of CFOs admit they don’t fully understand AI.

What is artificial intelligence?

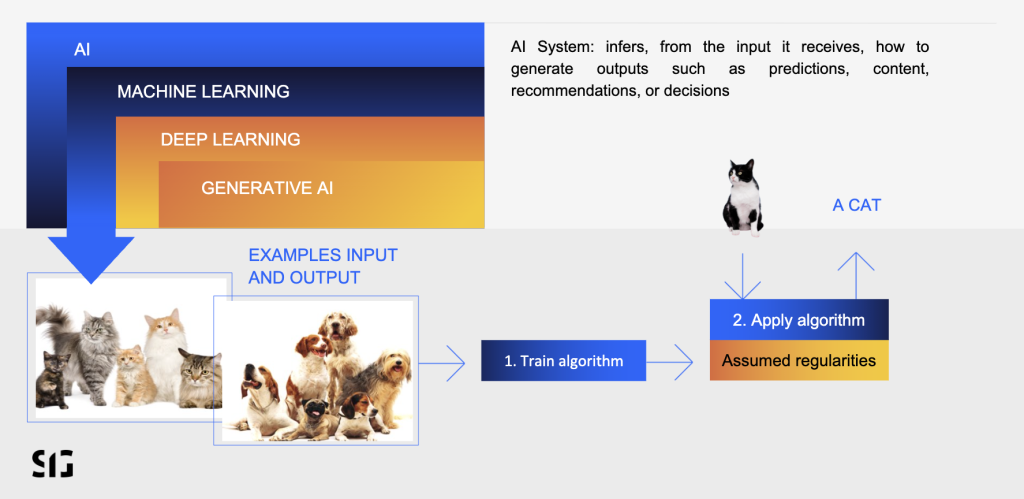

Artificial intelligence refers to systems that analyze input data and generate outputs—such as predictions, recommendations, decisions, or even creative content. Unlike traditional software, which follows predefined rules, AI models are designed to learn and adapt, allowing them to perform tasks autonomously.

At our recent IT leadership event, SCOPE 2024, Rob van der Veer explained AI concepts in simple terms.

In his keynote, he also explained that AI has several subsets. Let’s take a closer look at what that means.

Subsets of AI

- Machine learning: Enables systems to learn from data, identifying patterns and making predictions or decisions. For example, it powers personalized recommendations like those you see when shopping on Amazon.

- Deep learning: A more specialized subset of machine learning, using neural networks to process large, complex datasets. Think Apple Face ID or Spotify’s personalized song recommendations.

- Generative AI: A revolutionary advancement in AI, capable of creating text, code, and visuals. Examples include tools like ChatGPT and DALL-E.

How does AI work?

Artificial intelligence relies on four core elements to function effectively:

- Data: High-quality, diverse datasets are essential for training AI models.

- Machine learning algorithms: These allow AI to learn and identify patterns from the data.

- Computing power: Required to process and analyze data at scale.

- Human expertise: Guides the development, training, and ethical use of AI.

The training process involves collecting data, feeding it into algorithms, and iteratively refining the model for accuracy. While artificial intelligence excels in automation and data analysis, it also has limitations, such as lacking human intuition and creativity.
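To make the "collect, feed, refine" loop more concrete, here is a minimal Python sketch. It assumes a toy dataset generated from a known line (y = 2x + 1, plus a little noise) and uses plain gradient descent to fit a one-variable linear model; the function name `train_linear_model` is hypothetical. Real AI systems use far larger models and datasets, but the idea of repeatedly adjusting a model to reduce its error is the same.

```python
import random

def train_linear_model(xs, ys, learning_rate=0.01, epochs=2000):
    """Fit y ≈ w * x + b by repeatedly nudging w and b to reduce error.

    A toy illustration of iterative training (gradient descent on
    mean squared error), not a production training pipeline.
    """
    w, b = 0.0, 0.0  # start from an uninformed model
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        # Refine the model by stepping against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# 1. Collect data: a hypothetical dataset following y = 2x + 1 with small noise
random.seed(0)
xs = [i / 10 for i in range(50)]
ys = [2 * x + 1 + random.uniform(-0.1, 0.1) for x in xs]

# 2-3. Feed it to the algorithm and iteratively refine the model
w, b = train_linear_model(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")  # close to w=2, b=1
```

The model never sees the rule y = 2x + 1 directly; it recovers an approximation of it from the data alone, which is what "learning from data" means in practice.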

Looking for more on AI basics? Explore our detailed article tailored for non-technical business leaders here.

Now that we’ve got the basics under control, let’s dive into some of the benefits and risks of AI.

2. Advantages of artificial intelligence in business

AI is no longer a futuristic concept; it’s a core part of today’s business landscape. Gartner predicts that by 2026, over 80% of enterprises will use generative AI tools, and 75% of new enterprise applications will incorporate AI or machine learning models. From healthcare to logistics, organizations are leveraging AI to optimize operations and remain competitive.

How is artificial intelligence used in business?

- Healthcare: AI-powered analytics enable faster diagnoses and personalized treatment plans, as seen in AtlantiCare’s AI-driven lung cancer detection tools.

- Finance: Tools such as Google Cloud’s anomaly detection can analyze transaction patterns to combat financial crimes and cyber threats.

- Logistics: AI improves supply chain management, forecasts demand and optimizes delivery routes using real-time data.

3. The risks of AI

Despite its potential, artificial intelligence presents significant risks that can threaten businesses if not properly managed.

Key concerns include:

- Reputational damage: Misuse or failures in AI systems can harm a company’s image, especially with generative AI potentially spreading misinformation.

- Security risks of AI: AI systems are vulnerable to attacks and misuse, raising concerns about data protection and confidentiality.

- Bias and inaccuracy: AI is only as reliable as the data it’s trained on. Biased or flawed datasets can lead to unfair decisions or inaccurate results.

- Accountability challenges: With many AI systems operating as “black boxes,” it’s often unclear who is responsible when errors occur.

- Intellectual property issues: AI’s reliance on data raises questions about copyright and ownership, as seen in recent lawsuits involving AI-generated content.

Practical steps for responsible use of artificial intelligence in business

To tap into AI’s potential while minimizing risks, organizations need to ensure they are AI-ready. We’ve written a guide that features 19 practical steps to help organizations reap the benefits of artificial intelligence in business.

Key recommendations include:

- Establish accountability: Implement AI management systems to oversee applications from ethical, legal, and operational perspectives.

- Apply software best practices: Treat AI as a software system and prioritize areas like security and oversight.

- Mitigate cyber risks: Enforce a zero-trust approach, limit data collection, and build fail-safes to protect against AI failures.

4. AI regulation

As AI adoption surges and risks escalate, governments around the world are racing to implement regulatory frameworks to govern its development and usage. Let’s take a closer look at key approaches across the EU, the US, and beyond.

EU AI Act

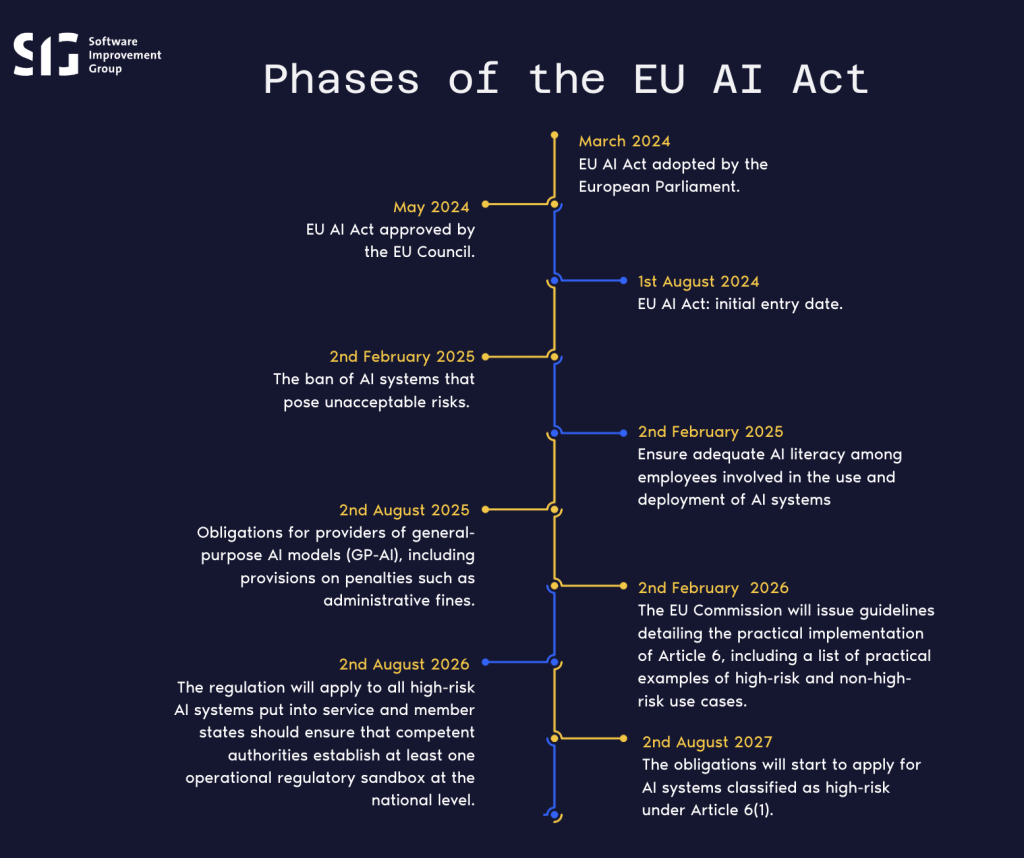

Approved by the European Parliament in March 2024, the EU AI Act is the first comprehensive legislation aimed at regulating AI across industries. It sets a global benchmark for AI governance, emphasizing accountability, safety, and the protection of human rights.

EU AI Act's risk categories

The Act classifies AI systems into four risk categories—unacceptable, high, limited, and minimal—each with corresponding obligations to ensure safety, transparency, and ethical use.

- Unacceptable-risk AI systems: These are AI systems that pose a significant threat to fundamental rights, safety, or societal well-being. These are banned outright, with very few exceptions. Examples include social scoring systems or real-time facial recognition without legal approval.

- High-risk AI systems: These are AI systems that have the potential to impact safety, rights, or critical decision-making. They are permitted but require strict risk assessments, EU database registration, and ongoing compliance monitoring. Examples include AI systems used for diagnosing diseases, assessing student performance, crime prediction, or managing power grids.

- Limited-risk AI systems: These are AI systems that pose lower risks but still require some level of transparency and accountability. For example, generative AI tools like ChatGPT and DALL-E must disclose when content is AI-generated and be designed to prevent the generation of illegal content.

- Minimal-risk AI systems: These are AI systems that are widely used in everyday applications and do not pose much risk. Examples include spam filters and AI-enabled video games.
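For teams building an internal AI inventory, the four tiers can be captured as a simple data structure. The Python sketch below is a simplified, hypothetical example that encodes only the tiers and examples described above; the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_USE_CASES`, and `obligations_for` are illustrative. Classifying a real system under the Act requires legal review, not a lookup table.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"

# Obligations per tier, paraphrasing the descriptions in this article
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["Prohibited (very few exceptions)"],
    RiskTier.HIGH: [
        "Risk assessment",
        "Registration in the EU database",
        "Ongoing compliance monitoring",
    ],
    RiskTier.LIMITED: ["Transparency: disclose AI-generated content"],
    RiskTier.MINIMAL: [],  # no specific obligations described above
}

# Hypothetical inventory entries, using the examples from this article
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "disease diagnosis": RiskTier.HIGH,
    "power grid management": RiskTier.HIGH,
    "generative chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

def obligations_for(use_case: str) -> list[str]:
    """Look up the obligations for a use case already recorded in the inventory."""
    tier = EXAMPLE_USE_CASES[use_case]
    return OBLIGATIONS[tier]

for use_case, tier in EXAMPLE_USE_CASES.items():
    print(f"{use_case}: {tier.value} -> {obligations_for(use_case) or 'none listed'}")
```

Keeping the tier on each inventory entry makes it easy to report, for example, how many high-risk systems need EU database registration.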

EU AI Act timeline

The EU AI Act will be phased in gradually, with obligations increasing every six to 12 months. Businesses operating in the EU may be required to comply with some parts of the Act as early as February 2025.

For the most up-to-date information, visit the European Parliament’s official website.

AI literacy

Starting February 2, 2025, organizations that provide or use (deploy) AI systems in the EU must ensure that staff involved in operating and using those systems have a sufficient level of AI literacy. The requirement is intended to help prevent misuse and ensure compliance.

Want to learn more about the EU AI Act? Check out our full breakdown here.

US AI regulation

Unlike the EU’s unified framework, the US adopts a fragmented approach to AI regulation, with initiatives at both federal and state levels.

Current overview of AI regulation in the US

Federal AI legislation

- The National Artificial Intelligence Initiative Act of 2020 (NAII) focuses on AI research and development, rather than regulation. It promotes US leadership in AI innovation through federal agency collaboration.

- The Blueprint for an AI Bill of Rights (2022) is a voluntary framework guiding federal agencies and other entities on AI governance. Its principles include ensuring safe systems, preventing algorithmic bias, protecting user privacy, and offering transparency and opt-out options.

State-level legislation

Several states have introduced AI-related laws to provide more structured governance.

- The Colorado AI Act, for example, mirrors elements of the EU AI Act, targeting high-risk AI systems with requirements for transparency, risk assessments, and compliance frameworks.

While the US lacks a comprehensive federal AI law, businesses must navigate this patchwork of regulations to avoid penalties for non-compliance and ensure ethical AI deployment.

Read more about federal and state regulations here.

Global trends in AI regulation

AI regulation is quickly becoming a global priority. Stanford University’s 2023 AI Index, which analyzed the legislative records of 127 countries, found that the number of AI-related bills passed into law grew from just one in 2016 to 37 in 2022.

Many countries are adopting the OECD AI Principles, promoting transparency, accountability, and human-centric AI. Organizations like the United Nations and G7 are also encouraging multilateral cooperation to balance AI’s risks and benefits.

Common regulatory themes

- Human agency and oversight: Ensuring AI systems remain under human control.

- Transparency: Mandating clear communication about AI’s operations and decisions.

- Ethical use: Preventing discrimination, ensuring privacy, and addressing environmental impacts.

Find out more about international AI regulation trends here.

5. ISO standards for artificial intelligence

As businesses increasingly adopt AI, the importance of standardized practices for its safe and effective implementation cannot be overstated. The International Organization for Standardization (ISO) has developed key standards that provide a robust framework for AI lifecycle management, data security, and governance.

Why ISO standards matter in AI governance

The ISO collaborates with global experts to establish best practices for various industries. In the realm of AI, ISO standards play a critical role in ensuring:

- Safety and security: Protecting data and preventing misuse.

- Risk mitigation: Addressing challenges like bias, transparency, and ethical considerations.

- Operational efficiency: Streamlining AI adoption while maintaining quality and accountability.

Adopting ISO standards for artificial intelligence not only supports regulatory compliance but also strengthens trust, enhances credibility, and gives you a competitive advantage in the market.


Key ISO standards for artificial intelligence

1. ISO/IEC 27001: Safeguarding information security

ISO/IEC 27001 emphasizes a holistic approach to managing information security through the CIA triad:

- Confidentiality: Ensuring only authorized access to sensitive data.

- Integrity: Ensuring data stays accurate, complete, and protected against unauthorized changes, supported by reliable storage and backups.

- Availability: Ensuring timely and reliable access to information.

It helps businesses enhance their cybersecurity measures to protect AI training data and operational systems. It can also improve resilience against cyber threats and data breaches.
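The integrity principle translates directly to AI training data. As a minimal Python sketch, assuming your training data is stored in files: record a SHA-256 fingerprint when a dataset is approved, then recompute it before each training run to detect changes made after approval, whether accidental or malicious. The helper names `fingerprint` and `verify_integrity` are hypothetical; `hashlib` is part of Python’s standard library.

```python
import hashlib
import os
import tempfile

def fingerprint(path: str) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_integrity(path: str, approved_digest: str) -> bool:
    """True if the file still matches the digest recorded at approval time."""
    return fingerprint(path) == approved_digest

# Example: approve a small dataset, then detect a later modification
with tempfile.TemporaryDirectory() as tmp:
    dataset = os.path.join(tmp, "training_data.csv")
    with open(dataset, "w") as f:
        f.write("feature,label\n1.0,0\n2.0,1\n")
    approved = fingerprint(dataset)
    ok_before = verify_integrity(dataset, approved)

    with open(dataset, "a") as f:  # simulate tampering
        f.write("9.9,1\n")
    ok_after = verify_integrity(dataset, approved)

print(ok_before, ok_after)  # True False
```

A hash check only detects changes after approval; it cannot tell you whether the approved data was clean to begin with, so it complements rather than replaces data validation.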

2. ISO 31700: Privacy by design

ISO 31700 establishes “privacy by design” as a core principle throughout the AI lifecycle, ensuring data privacy is prioritized from inception to deployment.

It can help businesses comply with privacy regulations such as GDPR, reduce risks associated with data misuse and breaches, and encourage innovation and agility in AI development.

3. ISO/IEC 5338: AI lifecycle management

Co-developed by Software Improvement Group, ISO/IEC 5338 extends traditional software lifecycle best practices to AI systems. It focuses on:

- Protecting sensitive training data.

- Addressing risks like transparency, bias, and purpose limitations.

- Continuous validation of AI models to prevent performance degradation.

It offers businesses clear guidance on managing AI risks throughout the system’s lifecycle, while enhancing quality assurance, project management, and operational oversight.
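Continuous validation can start simply. As an illustration only (not a procedure prescribed by ISO/IEC 5338), the Python sketch below compares a model’s accuracy on recent labelled data with the accuracy recorded at release, and flags degradation beyond a tolerance. The function names, the 0.05 tolerance, and the sample data are all assumptions.

```python
def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def check_for_degradation(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """Return True if current accuracy has dropped more than `tolerance` below baseline."""
    return (baseline - current) > tolerance

# Hypothetical numbers: accuracy measured at release vs. on this week's data
baseline_accuracy = 0.92
recent_predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_labels      = [1, 0, 0, 1, 1, 1, 0, 1, 1, 0]
current_accuracy = accuracy(recent_predictions, recent_labels)  # 6 of 10 correct

if check_for_degradation(baseline_accuracy, current_accuracy):
    print(f"Alert: accuracy fell from {baseline_accuracy:.2f} to {current_accuracy:.2f}")
```

In practice a check like this would run on a schedule and feed the same alerting and incident processes used for other production software.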

4. ISO/IEC 42001: Artificial Intelligence Management Systems (AIMS)

ISO/IEC 42001 is the first international standard dedicated to establishing and improving an Artificial Intelligence Management System (AIMS) within organizations. It focuses on:

- Ethical considerations and transparency in AI development.

- Balancing innovation with governance.

It offers businesses a structured approach to managing AI risks and opportunities, along with a framework for continually improving their AI systems.

Why businesses should adopt ISO standards for AI

While ISO compliance is voluntary, aligning with these standards prepares organizations for evolving regulations and industry best practices. By doing so, businesses can mitigate risks, build stakeholder trust, and explore AI opportunities responsibly to drive innovation.

Interested in integrating ISO standards into your organization? Learn more here.

6. How to implement AI in business: Build on existing frameworks

Integrating AI into your organization doesn’t have to mean starting from scratch. In fact, the most effective way to adopt AI is by building on your existing governance, risk, compliance, and security frameworks.

Extend, don’t replace

Many organizations mistakenly think they need entirely new processes for AI, leading to inefficiencies and silos. Instead, we recommend treating AI as an extension of your current software landscape. By building on what’s already in place, you can streamline your AI adoption while maintaining efficiency and compliance.

As our very own AI expert, Rob van der Veer, explained at our recent SCOPE 2024 event, “We’re seeing AI in isolation too much. Treat production AI for what it is: professional software.” This simple advice can make AI implementation a whole lot less daunting.

Key practices for optimizing AI integration

- Treat AI as software. This means integrating AI engineering activities into your regular software engineering program and building upon existing practices.

- Adopt ISO/IEC 5338, the new industry standard for AI lifecycle management, to help you manage AI systems alongside traditional software.

- Implement documentation best practices. Just like traditional software development, proper documentation is crucial for AI systems. Integrate AI into your existing version control, documentation, reporting, and auditing processes.

- Avoid silos. Incorporate AI models into your existing IT systems to prevent duplication and inefficiencies.

- Encourage cross-team collaboration. Get AI engineers and traditional software developers working together to ensure AI is integrated into the overall business strategy.

- Tweak traditional security controls to cover AI’s special security needs. Things like data segregation, input and model validation, and following standards like ISO/IEC 42001 can help you to effectively manage AI’s risks.

- Familiarize yourself with AI-specific risks like deepfakes and data poisoning. By integrating AI risks into existing risk registers, you can create a more unified approach that aligns with your company’s wider risk management strategies.
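Adding AI risks to an existing risk register, rather than starting a separate one, can be as simple as recording AI-specific entries with the same fields your other risks already use. The Python sketch below assumes a hypothetical register that scores likelihood and impact from 1 to 5; the `Risk` class, field names, IDs, and scores are illustrative and not taken from any standard.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    risk_id: str
    description: str
    category: str      # existing categories plus "AI"
    likelihood: int    # 1 (rare) to 5 (almost certain)
    impact: int        # 1 (negligible) to 5 (severe)
    owner: str

    @property
    def score(self) -> int:
        # Same likelihood x impact scoring the register already uses
        return self.likelihood * self.impact

# Existing entry in a hypothetical enterprise risk register
register = [
    Risk("R-001", "Ransomware on file servers", "Cybersecurity", 3, 5, "CISO"),
]

# AI-specific risks added to the same register, not a separate one
register += [
    Risk("R-014", "Data poisoning of fraud-detection training data", "AI", 2, 4, "CISO"),
    Risk("R-015", "Deepfake impersonation of executives", "AI", 3, 4, "CISO"),
]

# One unified view, ranked by score across all categories
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.risk_id} [{risk.category}] score={risk.score}: {risk.description}")
```

Because AI risks share the same scoring and ownership fields, they are prioritized alongside existing risks instead of competing for attention in a separate process.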

Learn more about how your business can successfully adopt AI by extending your existing frameworks here.

How to prepare for artificial intelligence in your business

AI is no longer just a buzzword. It’s here to stay, reshaping industries and changing the ways businesses operate. For business leaders, the question is no longer “if” but “how” to adopt AI responsibly and strategically to stay competitive.

We understand that this can sound daunting, but it doesn’t need to be.

To prepare your organization for artificial intelligence, we’ve developed an AI readiness guide authored by Rob van der Veer, Senior Principal Expert in AI at Software Improvement Group (SIG).

This step-by-step resource provides actionable advice across four critical areas:

The Board

A recent McKinsey study revealed that 91% of businesses feel unprepared to implement AI responsibly. As a board member, you hold a unique position to guide your organization through this transformative era. But with this comes the critical responsibility of ensuring AI is used ethically, securely, and in compliance with evolving regulations.

Recently, our Senior Principal Expert, Rob van der Veer, was invited to the Progression to Analog podcast, hosted by Caitlin Begg, to discuss AI readiness.

You can listen to the full podcast on either Apple or Spotify.

Governance, Risk, and Compliance (GRC)

With a recent McKinsey study estimating that generative AI and other technologies could automate work activities that absorb 60-70% of employees’ time, there’s no doubting AI’s potential to revolutionize efficiency, productivity, and innovation. But it also introduces serious risks, including:

- Bias and discrimination due to flawed training data.

- Reputational damage from non-transparent or unsafe AI.

- Regulatory fines for non-compliance with laws like the EU AI Act.

- Cybersecurity threats and data breaches tied to AI misuse.

As AI weaves its way into business operations, managing its governance, risk, and compliance (GRC) is critical. Proper governance can help to mitigate risks while unlocking opportunities such as enhanced decision-making, reduced costs, and improved brand trust.

Security (CISO)

With 83% of companies making AI a top priority in their business strategies, there’s no doubt about its potential to transform businesses. But with this rapid adoption comes unique security risks. As a Chief Information Security Officer (CISO), your role is crucial in safeguarding AI systems while integrating their security into your existing security management processes.

IT – including development (CTO)

As promising as AI is, it’s no silver bullet for solving business challenges. Without proper integration, AI risks becoming yet another confusing, disconnected initiative.

As a Chief Technology Officer (CTO), it’s your responsibility to ensure AI aligns with your business’s strategic goals and is integrated smoothly to avoid potential risks.