24 July 2024



Written by: Rob van der Veer, Asma Oualmakran

Artificial Intelligence (AI) is on the rise. However, many AI/big data systems face serious quality issues, especially in maintainability and testability. In this article, Rob van der Veer and Asma Oualmakran highlight these typical AI/big data system quality issues, analyze underlying causes, and offer recommendations to prevent a major AI crisis.

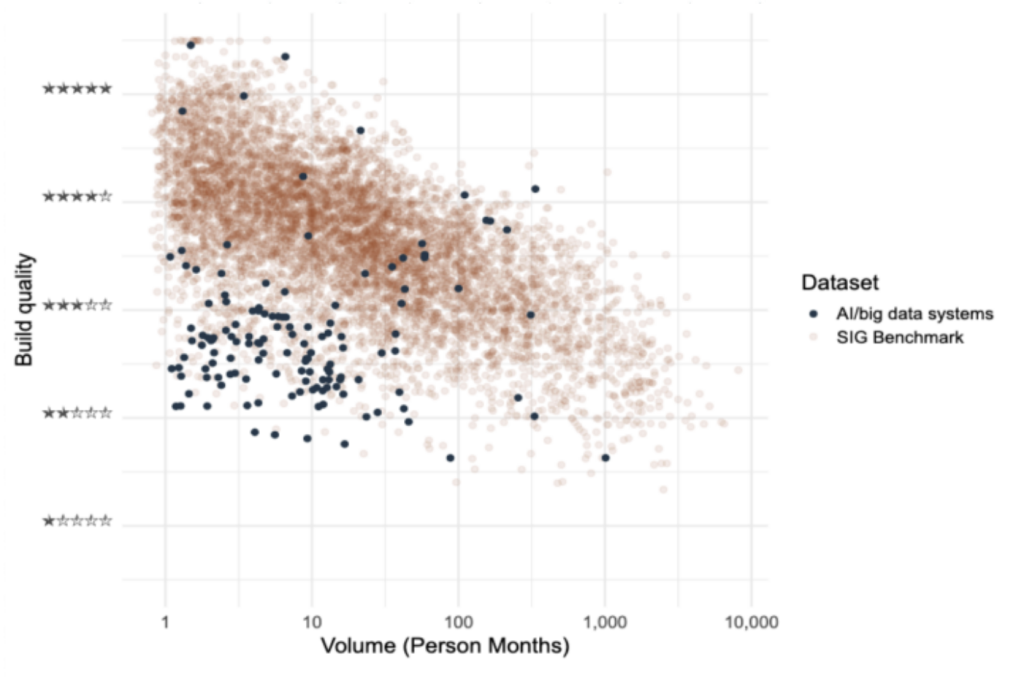

The SIG benchmark is a database containing source code from thousands of software systems, drawn from open-source projects and SIG customers. It reveals that AI/big data systems are significantly less maintainable than other systems: 73% of AI/big data systems score below average in the SIG benchmark.

© SIG – Sigrid Platform Benchmark

Figure 1: Our selection of AI/big data systems from the SIG benchmark was compiled by choosing systems focused on statistical analysis or machine learning. In this graph, the X-axis represents the system size measured in months of programming work, and the Y-axis shows the maintainability measured on a scale from 1 to 5 stars. Three stars is average.

The main reason for these maintainability issues is low scores on the quality attributes ‘Unit Size’ and ‘Unit Complexity’: these systems contain many long, complex code segments. Fortunately, the benchmark also contains AI/big data systems with high maintainability, which shows that building maintainable AI/big data systems is indeed possible.

Long and complex code segments are hard to analyze, modify, reuse, and test. The longer a piece of code, the more responsibilities it encompasses, and the more complex and numerous the decision paths become. This makes it infeasible to create tests that cover everything, which is demonstrated by the dramatically small amount of test code. In a typical AI/big data system, only 2% of the code is test code, compared to 43% in the benchmark.

Low maintainability makes it increasingly costly to implement changes, and the risk of introducing errors grows without proper means to detect these errors. Over time, as data and requirements change, adjustments are typically ‘patched on’ rather than properly integrated, making things even more complicated. Furthermore, transferring to another team becomes less feasible. In other words, typical AI/big data code tends to become a burden.

Why do long and complex pieces of code occur?

Such issues usually stem from unfocused code (code with more than one responsibility) and a lack of abstraction: useful pieces of code are not isolated into separate functions. Instead, they are copied, leading to much duplication, which in turn causes problems with adaptability and readability.
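As a minimal sketch of what isolating a useful piece of code can look like, the hypothetical example below moves a scaling step, which might otherwise be copied into every analysis script, into one small function. The name `standardize` and the sample data are assumptions made for illustration; they do not come from a system in the benchmark.

```python
from statistics import mean, pstdev


def standardize(values):
    """Scale values to zero mean and unit variance (hypothetical helper)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no signal; avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# One focused unit reused for every feature, instead of a copied block per feature.
temperature = standardize([12.0, 18.0, 24.0])
rainfall = standardize([0.0, 5.0, 10.0])
```

Because the logic lives in one place, a fix or change is made once, and the function is small enough to test on its own.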

In addition, functional test code (‘unit tests’) is often missing. One reason is that AI engineers tend to rely solely on integration tests, which measure the accuracy of the AI model. If the model performs poorly, it may indicate a fault. This approach has two issues:

For example, a model predicting drink sales using weather reports might score 80% accuracy. If an error causes the temperature to always read zero, the model can’t reach its full potential of a 95% score. Without proper test code, such errors remain undetected.
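A unit test on the data preparation step could catch this kind of error directly. The sketch below assumes a hypothetical `parse_temperature` helper and a `temp_c` field in the weather record; both are invented for illustration.

```python
def parse_temperature(record):
    """Read the temperature in degrees Celsius from a raw weather record.

    Hypothetical helper: the field name 'temp_c' is an assumption.
    """
    raw = record.get("temp_c")
    if raw is None:
        raise ValueError("weather record has no 'temp_c' field")
    return float(raw)


def test_parse_temperature_reads_actual_value():
    # A faulty version that silently returned 0 for every record would fail
    # here, long before model accuracy hinted at a problem.
    assert parse_temperature({"temp_c": "21.5"}) == 21.5
    assert parse_temperature({"temp_c": -3}) == -3.0


def test_parse_temperature_rejects_missing_value():
    try:
        parse_temperature({"humidity": 40})
    except ValueError:
        return
    raise AssertionError("expected ValueError for a missing temperature")
```

The test functions follow the naming convention that test runners such as pytest discover automatically, but they can also be called directly.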

We see the following as underlying causes of AI/big data code quality issues:

In AI/big data systems, we usually encounter teams composed mainly of data scientists. Working with them, we observe that they focus on creating functional analyses and models and often skip several software engineering best practices, which typically leads to maintainability issues.

We recommend continuously measuring and improving the maintainability and test coverage of AI/big data systems, so that data science teams receive direct quality feedback.
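To give a feel for what such a measurement can look like, here is a rough sketch that reports function length using Python's standard `ast` module. It is not the SIG maintainability model: the function names and the 15-line threshold are assumptions chosen for illustration, and the sketch covers only unit size, not complexity or test coverage.

```python
import ast


def unit_sizes(source):
    """Return {function_name: length_in_lines} for each function in source.

    Functions that share a name overwrite each other; acceptable for a sketch.
    """
    sizes = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available from Python 3.8 onwards.
            sizes[node.name] = node.end_lineno - node.lineno + 1
    return sizes


def oversized_units(source, max_lines=15):
    """List functions longer than max_lines (threshold is an assumption)."""
    return sorted(
        name for name, size in unit_sizes(source).items() if size > max_lines
    )
```

Running a check like this on every commit gives a team early feedback, before long units accumulate.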

Additionally, combining data scientists with software engineers in teams can be beneficial in two ways:

It’s crucial to view AI as software with unique characteristics, as outlined in ISO/IEC 5338, the new standard for AI engineering. Instead of creating a new process, this standard builds on the existing software lifecycle framework (ISO/IEC/IEEE 12207).

Organizations typically have proven practices like version control, testing, DevOps, knowledge management, documentation, and architecture, which need only minor adaptations for AI. AI should also be included in security and privacy activities, like penetration testing, taking its unique challenges into account [2]. This inclusive approach to software engineering allows AI to grow responsibly beyond the lab and helps prevent a crisis.

More research findings and code examples can be found in the SIG 2023 Benchmark Report.

References:

[1] Amershi et al. (Microsoft), “Software Engineering for Machine Learning: A Case Study”, presented at the 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP).

[2] For more information on AI security and privacy, refer to the OWASP AI Security and Privacy Guide.


