24 July 2024

As a software developer at Software Improvement Group (SIG) who recently joined the Sigrid team, I have witnessed first-hand how an efficient infrastructure and a team that follows development best practices can help you grow as a developer while making your first contributions easier.

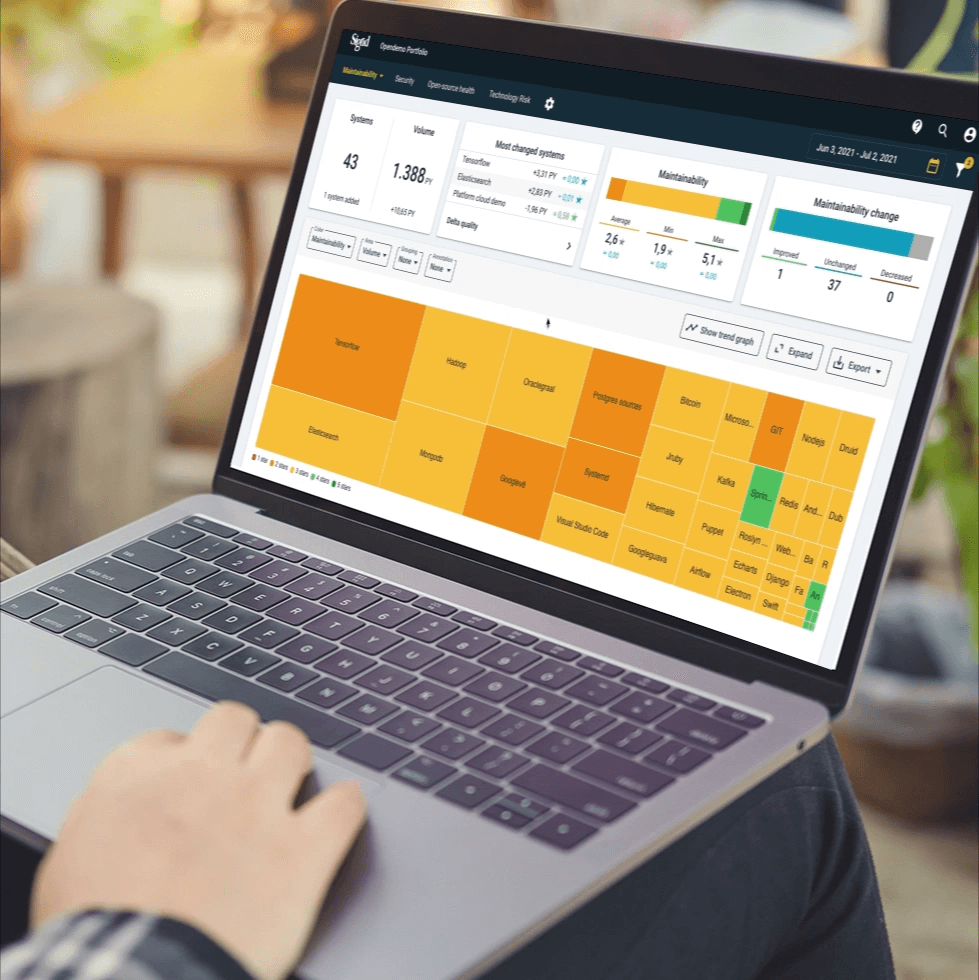

In this post, I describe how our development team works on Sigrid, SIG’s software assurance platform, which we build and maintain ourselves, and how we use modern tools and processes to deliver relevant features to our customers reliably and quickly. It’s a great feeling to get almost instant feedback on your work, and working in a team reinforces this habit. This way of working also keeps me motivated to improve our code and our processes and to help my peers improve, all with the ultimate goal of improving our product and giving our customers the best platform they can get.

At SIG, we strive for a healthier digital world, and that starts with following best practices when writing code and enabling our clients to do the same. I’ll show you how we do CI/CD, how it fosters a shared responsibility for built-in quality within our development team, and how, as a result, it creates a healthy, high-performance environment for developers.

Setting up a pipeline that enables CI/CD starts at the code architecture level. Some architectures lend themselves naturally to CI/CD, while others need profound changes before best practices can be applied. For example, a microservices-oriented architecture whose main building blocks are loosely coupled makes it easier to develop and test modules in isolation, which in turn makes CI/CD principles much easier to apply. In contrast, in a rigid structure where components are highly coupled, it is much harder to test anything in isolation, and therefore hard to build the solid foundation these principles depend on.

At SIG, our architecture was built from the ground up to benefit from CI/CD.

We use Java, Spring Boot, Maven, Hibernate, Airflow, and Docker as our main development tools, which allows us to set up a comprehensive pipeline. This pipeline gives us confidence that our code works correctly and will keep working correctly when we make changes. Getting immediate feedback on every commit is what allows us to move fast and with confidence.

I’ll show you how we set up a CI/CD pipeline.

The first stage of our pipeline is the build stage. This is where the code is compiled, unit tests are executed, and Docker images are built from the freshly compiled code and pushed to our Docker registry. The images are tagged differently depending on whether we are building a feature branch or code that was merged to the main branch and is about to be released to production.

A common practice here is to tag the Docker images accordingly: with the `latest` tag if the pipeline runs against the main branch, and the image is therefore ready to go live in production, or with the branch name if it’s a side branch where a feature is being built or a bug is being fixed. This ensures that the latest version of the code on every branch is always available for local testing and can be compared with the version available to customers, which is very useful when verifying bug fixes or API changes.
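
The tagging rule above can be sketched as a small Java helper. This is a minimal sketch under our own assumptions, not SIG’s pipeline code: the class `ImageTags`, the method `tagFor`, and the assumption that the release branch is literally named `main` are hypothetical. Because Docker tags may only contain letters, digits, underscores, periods, and hyphens (up to 128 characters, not starting with a period or hyphen), the sketch replaces characters such as the `/` in `feature/login` before using a branch name as a tag.

```java
/**
 * Hypothetical helper: chooses the Docker image tag for a pipeline run.
 * The main branch maps to "latest"; any other branch maps to a sanitized branch name.
 */
final class ImageTags {

    // Assumption: the release branch is named "main".
    private static final String MAIN_BRANCH = "main";
    // Docker limits tags to 128 characters.
    private static final int MAX_TAG_LENGTH = 128;

    private ImageTags() {
    }

    static String tagFor(String branch) {
        if (MAIN_BRANCH.equals(branch)) {
            return "latest";
        }
        // Docker tags allow only [A-Za-z0-9_.-]; replace anything else (e.g. "/") with "-".
        String tag = branch.replaceAll("[^A-Za-z0-9_.-]", "-");
        // A tag must not start with "." or "-".
        tag = tag.replaceAll("^[.-]+", "");
        if (tag.isEmpty()) {
            throw new IllegalArgumentException("Branch name yields no valid tag: " + branch);
        }
        return tag.length() > MAX_TAG_LENGTH ? tag.substring(0, MAX_TAG_LENGTH) : tag;
    }
}
```

For example, `ImageTags.tagFor("feature/login")` returns `feature-login`, while `ImageTags.tagFor("main")` returns `latest`. Keeping the rule in one place means each branch consistently maps to its own image tag.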

We also build a test data image that our front-end team uses to develop the UI against a realistic dataset, which gives both teams a lot of flexibility.

This setup has the added benefit that the development team faces little to no friction when releasing new code while, at the same time, doing maintenance work or developing new features. Every commit produces a release tagged according to the branch it was pushed to, so each developer gets independent Docker images that don’t interfere with anyone else’s. This is an essential feature of a good CI/CD pipeline: it lets teams split into independent sub-teams that move fast and work on several development tasks in parallel, fostering a highly productive environment.

The integration stage is typically where integration tests are executed, along with other pipeline tasks such as dependency checks that assess whether our code dependencies are up to date. At this stage, having a good structure in place can make a big difference to the efficiency of your pipelines. From our own experience, we observe the following:

An important aspect is making sure that relevant test data is available for the tests. How do we do that? We take a couple of representative systems internally and dockerize them in a dedicated database container. This way, when testing our API endpoints, we can exercise the various flows and scenarios just as actual customers would. It also gives us the added assurance that, if our pipelines succeed, there is a very high probability that the production code will work as expected across multiple scenarios.

At SIG, we use the Testcontainers library to keep tests reproducible and as independent of each other as possible. It lets us pull the relevant Docker images into our tests and integrates easily with Spring Boot. This way, we use Docker throughout our pipelines, from development to production, which is great for consistency.
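
A minimal sketch of how Testcontainers and Spring Boot can be wired together for such a test, assuming JUnit 5 and a PostgreSQL-compatible test data image. The image name `registry.example.com/test-data-db:latest`, the class name, and the choice of PostgreSQL are hypothetical; the article does not say which database is used. `@Container` on a static field starts one container for the test class, and `@DynamicPropertySource` points Spring Boot’s datasource at it once the container’s mapped port is known. It assumes the Testcontainers `junit-jupiter` and `postgresql` modules, Spring Boot’s JDBC support, and the PostgreSQL driver are on the test classpath.

```java
import static org.junit.jupiter.api.Assertions.assertTrue;

import java.sql.Connection;
import javax.sql.DataSource;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.DynamicPropertyRegistry;
import org.springframework.test.context.DynamicPropertySource;
import org.testcontainers.containers.PostgreSQLContainer;
import org.testcontainers.junit.jupiter.Container;
import org.testcontainers.junit.jupiter.Testcontainers;
import org.testcontainers.utility.DockerImageName;

@SpringBootTest
@Testcontainers
class TestDataDatabaseIT {

    // Hypothetical image: a PostgreSQL database pre-loaded with representative systems.
    private static final DockerImageName TEST_DATA_IMAGE =
            DockerImageName.parse("registry.example.com/test-data-db:latest")
                    .asCompatibleSubstituteFor("postgres");

    // Static @Container field: started once before the tests in this class, stopped afterwards.
    @Container
    static final PostgreSQLContainer<?> DATABASE = new PostgreSQLContainer<>(TEST_DATA_IMAGE);

    // The suppliers are resolved lazily, after the container has started.
    @DynamicPropertySource
    static void datasourceProperties(DynamicPropertyRegistry registry) {
        registry.add("spring.datasource.url", DATABASE::getJdbcUrl);
        registry.add("spring.datasource.username", DATABASE::getUsername);
        registry.add("spring.datasource.password", DATABASE::getPassword);
    }

    @Autowired
    private DataSource dataSource;

    @Test
    void applicationTalksToTheTestDataContainer() throws Exception {
        // Verifies that the Spring-managed DataSource reaches the containerized database.
        try (Connection connection = dataSource.getConnection()) {
            assertTrue(connection.isValid(2));
        }
    }
}
```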

However, over time, we realized that this setup had its drawbacks. In our case, the cost showed up as pipeline execution times that became too long and started to erode our agility. As our codebase grew, so did the number of tests using Testcontainers, making our pipelines longer and longer. If we left things as they were, we would need more machines to distribute the load and keep execution times under control. The alternative was to dig deeper into Testcontainers and see whether we could gain something by adapting the framework to our needs.

We noticed that many of our integration tests only retrieved data and never changed the state of the containers at runtime, while others mutated the data. Only the containers used by the mutating tests genuinely needed to be recreated for every such test.

It felt like we were onto something! Our integration tests fell into two clear groups, read-only and data-mutating, and we weren’t exploiting that distinction. If we bundled together the tests that do not mutate data and separated them from the tests that do, we could use Testcontainers’ ability to reuse containers across tests and reduce our pipeline execution times considerably.
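
As a rough back-of-the-envelope model of why this grouping pays off, assume (our simplification, not a measurement) that container startup time $s$ dominates, that there are $n_i$ read-only tests and $n_m$ data-mutating tests, and that previously every test started its own container. Sharing one container among the read-only tests then changes the total startup cost as follows:

$$
T_{\text{before}} \approx (n_i + n_m)\,s, \qquad
T_{\text{after}} \approx (1 + n_m)\,s, \qquad
T_{\text{before}} - T_{\text{after}} \approx (n_i - 1)\,s
$$

Under this model the saving grows linearly with the number of read-only tests, so it becomes more valuable as the codebase grows.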

To implement this at a technical level, we introduced two distinct top-level base classes for our integration tests: `MutableEndpointsTestBase` and `ImmutableEndpointsTestBase`.

The main difference between these two classes is that the immutable one calls `withReuse(true)` on its Testcontainers container, so the same container is reused by all tests that inherit from this base class instead of being recreated for each of them. This significantly reduced pipeline execution times for “immutable” tests without requiring any changes to the surrounding infrastructure, and it lets us get the full benefit of Docker for integration tests.
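
The two base classes could look roughly like the sketch below, assuming JUnit 5, the Testcontainers `junit-jupiter` and `postgresql` modules, and a PostgreSQL-compatible test data image. The image name and the `TestDataImage` holder are hypothetical, and a real setup would also point Spring Boot at the container. The immutable base keeps its container in a static field and starts it once, so every subclass in the same JVM shares it; `withReuse(true)` additionally lets Testcontainers keep the container alive between runs, but only when reuse is enabled on the machine with `testcontainers.reuse.enable=true` in `~/.testcontainers.properties` (a feature Testcontainers documents as experimental). The mutable base uses a non-static `@Container` field, which the JUnit 5 extension starts before and stops after every test method.

```java
import org.testcontainers.containers.PostgreSQLContainer;
import org.testcontainers.junit.jupiter.Container;
import org.testcontainers.junit.jupiter.Testcontainers;
import org.testcontainers.utility.DockerImageName;

/** Hypothetical holder for the test data image name. */
final class TestDataImage {
    static final DockerImageName NAME =
            DockerImageName.parse("registry.example.com/test-data-db:latest")
                    .asCompatibleSubstituteFor("postgres");

    private TestDataImage() {
    }
}

/** Base class for tests that only read data: one shared container for all subclasses. */
abstract class ImmutableEndpointsTestBase {

    protected static final PostgreSQLContainer<?> DATABASE =
            new PostgreSQLContainer<>(TestDataImage.NAME).withReuse(true);

    static {
        // Started by hand rather than via @Container: the JUnit extension would
        // otherwise stop the container after each test class.
        DATABASE.start();
    }
}

/** Base class for tests that modify data: a fresh container for every test method. */
@Testcontainers
abstract class MutableEndpointsTestBase {

    // Non-static @Container field: started before and stopped after each test method,
    // so one test's writes never leak into the next.
    @Container
    protected final PostgreSQLContainer<?> database = new PostgreSQLContainer<>(TestDataImage.NAME);
}
```

A read-only test would then simply extend `ImmutableEndpointsTestBase` and connect through `DATABASE.getJdbcUrl()`, while a test that writes data would extend `MutableEndpointsTestBase` and use its own `database` field.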

We use Spring REST Docs, which generates our API documentation programmatically from tests, so the documentation is always up to date. The overhead for developers is minimal, while the benefit for other stakeholders is significant.
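
A minimal Spring REST Docs test might look like the sketch below, assuming Spring Boot’s test auto-configuration, MockMvc, and the `spring-restdocs-mockmvc` dependency; the endpoint `/api/systems`, the snippet identifier `list-systems`, and the class name are hypothetical. When the request succeeds, `document(...)` writes Asciidoctor snippets (such as the HTTP request and response) that can be included in the published API documentation.

```java
import static org.springframework.restdocs.mockmvc.MockMvcRestDocumentation.document;
import static org.springframework.test.web.servlet.request.MockMvcRequestBuilders.get;
import static org.springframework.test.web.servlet.result.MockMvcResultMatchers.status;

import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.autoconfigure.restdocs.AutoConfigureRestDocs;
import org.springframework.boot.test.autoconfigure.web.servlet.AutoConfigureMockMvc;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.web.servlet.MockMvc;

@SpringBootTest
@AutoConfigureMockMvc
@AutoConfigureRestDocs
class SystemsEndpointDocumentationTest {

    @Autowired
    private MockMvc mockMvc;

    @Test
    void documentsListSystems() throws Exception {
        // "/api/systems" is a hypothetical endpoint used only for illustration.
        mockMvc.perform(get("/api/systems"))
                .andExpect(status().isOk())
                // With Maven, snippets land in target/generated-snippets/list-systems by default.
                .andDo(document("list-systems"));
    }
}
```

Because `andDo(document(...))` only runs after the expectations pass, a broken endpoint fails the build instead of producing documentation for the wrong behaviour.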

When onboarding new developers or extending our existing APIs, our architecture makes covering new code with tests as simple as extending the base classes that encapsulate the common services and writing the custom code for the new service. Modularity and reusability of test code are essential when doing CI/CD.

New developers are also quickly brought up to speed with our standards, because we have ArchUnit tests in place. ArchUnit lets us express our architectural principles as code. For example, when a developer who is unfamiliar with the codebase adds a service in the wrong place or references a repository class directly from a resource class, the pipeline fails and tells the developer that the code does not conform to our architectural standards. These tests go as far as checking for specific annotations on certain classes, so together they form a highly detailed description of our architecture. They help new developers become better developers by learning about architecture as part of their regular work, which is something not many teams can say.
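
Rules of the kind described above might be expressed with ArchUnit’s JUnit 5 support roughly as follows. The root package `com.example.sigrid`, the `..resource..`, `..repository..`, and `..service..` package conventions, and the class name are assumptions for illustration, not SIG’s actual layout; `classes()`, `noClasses()`, `@AnalyzeClasses`, and `@ArchTest` are standard ArchUnit API. Each rule runs as a test, so a violation fails the pipeline with a message describing the broken rule.

```java
import static com.tngtech.archunit.lang.syntax.ArchRuleDefinition.classes;
import static com.tngtech.archunit.lang.syntax.ArchRuleDefinition.noClasses;

import com.tngtech.archunit.junit.AnalyzeClasses;
import com.tngtech.archunit.junit.ArchTest;
import com.tngtech.archunit.lang.ArchRule;
import org.springframework.stereotype.Service;

// "com.example.sigrid" and the package names below are hypothetical.
@AnalyzeClasses(packages = "com.example.sigrid")
class ArchitectureTest {

    // Resource (endpoint) classes must go through services, never straight to repositories.
    @ArchTest
    static final ArchRule resourcesDoNotUseRepositories =
            noClasses().that().resideInAPackage("..resource..")
                    .should().dependOnClassesThat().resideInAPackage("..repository..");

    // Every Spring @Service must live in a service package.
    @ArchTest
    static final ArchRule servicesLiveInServicePackage =
            classes().that().areAnnotatedWith(Service.class)
                    .should().resideInAPackage("..service..");

    // Classes named *Service in a service package must carry the @Service annotation.
    @ArchTest
    static final ArchRule servicesAreAnnotated =
            classes().that().resideInAPackage("..service..")
                    .and().haveSimpleNameEndingWith("Service")
                    .should().beAnnotatedWith(Service.class);
}
```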

After the code has compiled and all tests have passed, the pipeline’s final stage is the production release. This stage is also automated: once code is merged to the main branch and the pipeline passes, Puppet picks up the newly released Docker images, and the services running them are updated automatically at regular, fixed intervals.

This is the Continuous Delivery aspect at SIG: a change that is successfully merged to the main branch is automatically deployed and made visible to customers and other stakeholders, without any manual intervention from the developers. This builds the confidence and reliability the team needs to move fast and efficiently.

Building an efficient CI/CD pipeline requires dedicated effort and a suitable code architecture, but it is achievable when you follow these guidelines:

Maintaining a CI/CD pipeline aligned with your company’s vision and its software development and delivery goals is a continuous process that never finishes. As your company changes, grows, and adapts to market trends, you also need to make sure that your setup adapts to these external changes so that it keeps delivering value.

To keep up with modern CI/CD practices, we are now looking to adopt Kubernetes to stay at the forefront of new ways to deploy code. We are also using modern tools during development and testing, such as cdk8s, an open-source tool backed by Amazon that programmatically generates Kubernetes deployment specs. We launch these specs on a small development cluster so developers can run checks and verifications against a replica of the actual production environment, an extra guarantee that the code is functionally correct. Using and exploring these modern tools is extremely interesting and motivating, and it allows you to grow as a developer.

In my next post, I will detail how we leverage a managed Kubernetes cluster at SIG to empower our developers with environments that allow for fast testing of features against a production setup. Stay tuned!
